It was a weeknight, after dinner, and the baby was in bed. My wife and I were alone—we thought—discussing the sorts of things you might discuss with your spouse and no one else. (Specifically, we were critiquing a friend’s taste in romantic partners.) I was midsentence when, without warning, another woman’s voice piped in from the next room. We froze.

“I HELD THE DOOR OPEN FOR A CLOWN THE OTHER DAY,” the woman said in a loud, slow monotone. It took us a moment to realize that her voice was emanating from the black speaker on the kitchen table. We stared slack-jawed as she—it—continued: “I THOUGHT IT WAS A NICE JESTER.”

“What. The hell. Was that,” I said after a moment of stunned silence. Alexa, the voice assistant whose digital spirit animates the Amazon Echo, did not reply. She—it—responds only when called by name. Or so we had believed.

We pieced together what must have transpired. Somehow, Alexa’s speech recognition software had mistakenly picked the word Alexa out of something we said, then chosen a phrase like “tell me a joke” as its best approximation of whatever words immediately followed. Through some confluence of human programming and algorithmic randomization, it chose a lame jester/gesture pun as its response.

In retrospect, the disruption was more humorous than sinister. But it was also a slightly unsettling reminder that Amazon’s hit device works by listening to everything you say, all the time. And that, for all Alexa’s human trappings—the name, the voice, the conversational interface—it’s no more sentient than any other app or website. It’s just code, built by some software engineers in Seattle with a cheesy sense of humor.

But the Echo’s inadvertent intrusion into an intimate conversation is also a harbinger of a more fundamental shift in the relationship between human and machine. Alexa—and Siri and Cortana and all of the other virtual assistants that now populate our computers, phones, and living rooms—are just beginning to insinuate themselves, sometimes stealthily, sometimes overtly, and sometimes a tad creepily, into the rhythms of our daily lives. As they grow smarter and more capable, they will routinely surprise us by making our lives easier, and we’ll steadily become more reliant on them.

Even as many of us continue to treat these bots as toys and novelties, they are on their way to becoming our primary gateways to all sorts of goods, services, and information, both public and personal. When that happens, the Echo won’t just be a cylinder in your kitchen that sometimes tells bad jokes. Alexa and virtual agents like it will be the prisms through which we interact with the online world.

It’s a job to which they will necessarily bring a set of biases and priorities, some subtler than others. Some of those biases and priorities will reflect our own. Others, almost certainly, will not. Those vested interests might help to explain why they seem so eager to become our friends.

* * *

AP

In the beginning, computers spoke only computer language, and a human seeking to interact with one was compelled to do the same. First came punch cards, then typed commands such as run, print, and dir.

The 1980s brought the mouse click and the graphical user interface to the masses; the 2000s, touch screens; the 2010s, gesture control and voice. It has all been leading, gradually and imperceptibly, to a world in which we no longer have to speak computer language, because computers will speak human language—not perfectly, but well enough to get by.

We aren’t there yet. But we’re closer than most people realize. And the implications—many of them exciting, some of them ominous—will be tremendous.

Like card catalogs and AOL-style portals before it, Web search will begin to fade from prominence, and with it the dominance of browsers and search engines. Mobile apps as we know them—icons on a home screen that you tap to open—will start to do the same. In their place will rise an array of virtual assistants, bots, and software agents that act more and more like people: not only answering our queries, but acting as our proxies, accomplishing tasks for us, and asking questions of us in return.

This is already beginning to happen—and it isn’t just Siri or Alexa. As of April, all five of the world’s dominant technology companies are vying to be the Google of the conversation age. Whoever wins has a chance to get to know us more intimately than any company or machine has before—and to exert even more influence over our choices, purchases, and reading habits than they already do.

So say goodbye to Web browsers and mobile home screens as our default portals to the Internet. And say hello to the new wave of intelligent assistants, virtual agents, and software bots that are rising to take their place.

No, really, say “hello” to them. Apple’s Siri, Google’s mobile search app, Amazon’s Alexa, Microsoft’s Cortana, and Facebook’s M, to name just five of the most notable, are diverse in their approaches, capabilities, and underlying technologies. But, with one exception, they’ve all been programmed to respond to basic salutations in one way or another, and it’s a good way to start to get a sense of their respective mannerisms. You might even be tempted to say they have different personalities.

Siri’s response to “hello” varies, but it’s typically chatty and familiar:

Slate/Screenshot

Alexa is all business:

Slate/Screenshot

Google is a bit of an idiot savant: It responds by pulling up a YouTube video of the song “Hello” by Adele, along with all the lyrics.

Slate/Screenshot

Cortana isn’t interested in saying anything until you’ve handed her the keys to your life:

Slate/Screenshot

Once those formalities are out of the way, she’s all solicitude:

Slate/Screenshot

Then there’s Facebook M, an experimental bot, available so far only to an exclusive group of Bay Area beta-testers, that lives inside Facebook Messenger and promises to answer almost any question and fulfill almost any (legal) request. If the casual, what’s-up-BFF tone of its text messages rings eerily human, that’s because it is: M is powered by an uncanny pairing of artificial intelligence and anonymous human agents.

Slate/Screenshot

You might notice that most of these virtual assistants have female-sounding names and voices. Facebook M doesn’t have a voice—it’s text-only—but it was initially rumored to be called Moneypenny, a reference to a secretary from the James Bond franchise. And even Google’s voice is female by default. This is, to some extent, a reflection of societal sexism. But these bots’ apparent embrace of gender also highlights their aspiration to be anthropomorphized: They want—that is, the engineers that build them want—to interact with you like a person, not a machine. It seems to be working: Already people tend to refer to Siri, Alexa, and Cortana as “she,” not “it.”

That Silicon Valley’s largest tech companies have effectively humanized their software in this way, with little fanfare and scant resistance, represents a coup of sorts. Once we perceive a virtual assistant as human, or at least humanoid, it becomes an entity with which we can establish humanlike relations. We can like it, banter with it, even turn to it for companionship when we’re lonely. When it errs or betrays us, we can get angry with it and, ultimately, forgive it. What’s most important, from the perspective of the companies behind this technology, is that we trust it.

Should we?

* * *

Siri wasn’t the first digital voice assistant when Apple introduced it in 2011, and it may not have been the best. But it was the first to show us what might be possible: a computer that you talk to like a person, that talks back, and that attempts to do what you ask of it without requiring any further action on your part. Adam Cheyer, co-founder of the startup that built Siri and sold it to Apple in 2010, has said he initially conceived of it not as a search engine, but as a “do engine.”

If Siri gave us a glimpse of what was possible, it also inadvertently taught us what wasn't, at least not yet. At first, it often struggled to understand you, especially if you spoke into your iPhone with an accent, and it routinely blundered attempts to carry out your will. Its quick-witted rejoinders to select queries ("Siri, talk dirty to me") raised expectations for its intelligence that were promptly dashed once you asked it something it hadn't been hard-coded to answer. Its store of knowledge proved trivial compared with the vast information readily available via Google search. Siri was as much an inspiration as a disappointment.

Five years later, Siri has gotten smarter, if perhaps less so than one might have hoped. More importantly, the technology underlying it has drastically improved, fueled by a boom in the computer science subfield of machine learning. That has led to sharp improvements in speech recognition and natural language understanding, two separate but related technologies that are crucial to voice assistants.

Reuters/Suzanne Plunkett

Luke Peters demonstrates Siri, an application which uses voice recognition and detection on the iPhone 4S, outside the Apple store in Covent Garden, London, Oct. 14, 2011.

If a revolution in technology has made intelligent virtual assistants possible, what has made them inevitable is a revolution in our relationship to technology. Computers began as tools of business and research, designed to automate tasks such as math and information retrieval. Today they’re tools of personal communication, connecting us not only to information but to one another. They’re also beginning to connect us to all the other technologies in our lives: Your smartphone can turn on your lights, start your car, activate your home security system, and withdraw money from your bank. As computers have grown deeply personal, our relationship with them has changed. And yet the way they interact with us hasn’t quite caught up.

“It’s always been sort of appalling to me that you now have a supercomputer in your pocket, yet you have to learn to use it,” says Alan Packer, head of language technology at Facebook. “It seems actually like a failure on the part of our industry that software is hard to use.”

Packer is one of the people trying to change that. As a software developer at Microsoft, he helped to build Cortana. After it launched, he found his skills in heavy demand, especially among the two tech giants that hadn’t yet developed voice assistants of their own. One Thursday morning in December 2014, Packer was on the verge of accepting a top job at Amazon—“You would not be surprised at which team I was about to join,” he says—when Facebook called and offered to fly him to Menlo Park, California, for an interview the next day. He had an inkling of what Amazon was working on, but he had no idea why Facebook might be interested in someone with his skill set.

As it turned out, Facebook wanted Packer for much the same purpose that Microsoft and Amazon did: to help it build software that could make sense of what its users were saying and generate intelligent responses. Facebook may not have a device like the Echo or an operating system like Windows, but its own platforms are full of billions of people communicating with one another every day. If Facebook can better understand what they’re saying, it can further hone its News Feed and advertising algorithms, among other applications. More creatively, Facebook has begun to use language understanding to build artificial intelligence into its Messenger app. Now, if you’re messaging with a friend and mention sharing an Uber, a software agent within Messenger can jump in and order it for you while you continue your conversation.

In short, Packer says, Facebook is working on language understanding because Facebook is a technology company, and that's where technology is headed. As if to underscore that point, Packer's former employer this year headlined its annual developer conference by announcing plans to turn Cortana into a portal for conversational bots and integrate it into Skype, Outlook, and other popular applications. Microsoft CEO Satya Nadella predicted that bots will be the Internet's next major platform, overtaking mobile apps the same way apps once eclipsed desktop computing.

* * *

AP

Siri may not have been very practical, but people immediately grasped what it was. With Amazon’s Echo, the second major tech gadget to put a voice interface front and center, it was the other way around. The company surprised the industry and baffled the public when it released a device in November 2014 that looked and acted like a speaker—except that it didn’t connect to anything except a power outlet, and the only buttons were for power and mute. You control the Echo solely by voice, and if you ask it questions, it talks back. It was like Amazon had decided to put Siri in a black cylinder and sell it for $179. Except Alexa, the virtual intelligence software that powers the Echo, was far more limited than Siri in its capabilities. Who, reviewers wondered, would buy such a bizarre novelty gadget?

That question has faded as Amazon has gradually upgraded and refined the Alexa software, and the five-star Amazon reviews have since poured in. In the New York Times, Farhad Manjoo recently followed up his tepid initial review with an all-out rave: The Echo “brims with profound possibility,” he wrote. Amazon has not disclosed sales figures, but the Echo ranks as the third-best-selling gadget in its electronics section. Alexa may not be as versatile as Siri—yet—but it turned out to have a distinct advantage: a sense of purpose, and of its own limitations. Whereas Apple implicitly invites iPhone users to ask Siri anything, Amazon ships the Echo with a little cheat sheet of basic queries that it knows how to respond to: “Alexa, what’s the weather?” “Alexa, set a timer for 45 minutes.” “Alexa, what’s in the news?”

The cheat sheet’s effect is to lower expectations to a level that even a relatively simplistic artificial intelligence can plausibly meet on a regular basis. That’s by design, says Greg Hart, Amazon’s vice president in charge of Echo and Alexa. Building a voice assistant that can respond to every possible query is “a really hard problem,” he says. “People can get really turned off if they have an experience that’s subpar or frustrating.” So the company began by picking specific tasks that Alexa could handle with aplomb and communicating those clearly to customers.

At launch, the Echo had just 12 core capabilities. That list has grown steadily as the company has augmented Alexa’s intelligence and added integrations with new services, such as Google Calendar, Yelp reviews, Pandora streaming radio, and even Domino’s delivery. The Echo is also becoming a hub for connected home appliances: “ ‘Alexa, turn on the living room lights’ never fails to delight people,” Hart says.

When you ask Alexa a question it can’t answer or say something it can’t quite understand, it fesses up: “Sorry, I don’t know the answer to that question.” That makes it all the more charming when you test its knowledge or capabilities and it surprises you by replying confidently and correctly. “Alexa, what’s a kinkajou?” I asked on a whim one evening, glancing up from my laptop while reading a news story about an elderly Florida woman who woke up one day with a kinkajou on her chest. Alexa didn’t hesitate: “A kinkajou is a rainforest mammal of the family Procyonidae … ” Alexa then proceeded to list a number of other Procyonidae to which the kinkajou is closely related. “Alexa, that’s enough,” I said after a few moments, genuinely impressed. “Thank you,” I added.

“You’re welcome,” Alexa replied, and I thought for a moment that she—it—sounded pleased.

As delightful as it can seem, the Echo’s magic comes with some unusual downsides. In order to respond every time you say “Alexa,” it has to be listening for the word at all times. Amazon says it only stores the commands that you say after you’ve said the word Alexa and discards the rest. Even so, the enormous amount of processing required to listen for a wake word 24/7 is reflected in the Echo’s biggest limitation: It only works when it’s plugged into a power outlet. (Amazon’s newest smart speakers, the Echo Dot and the Tap, are more mobile, but one sacrifices the speaker and the other the ability to respond at any time.)

Even if you trust Amazon to rigorously protect and delete all of your personal conversations from its servers—as it promises it will if you ask it to—Alexa’s anthropomorphic characteristics make it hard to shake the occasional sense that it’s eavesdropping on you, Big Brother–style. I was alone in my kitchen one day, unabashedly belting out the Fats Domino song “Blueberry Hill” as I did the dishes, when it struck me that I wasn’t alone after all. Alexa was listening—not judging, surely, but listening all the same. Sheepishly, I stopped singing.

* * *

Google Images

The notion that the Echo is "creepy" or "spying on us" might be the most common criticism of the device so far. But there's a more fundamental problem. It's one that is likely to haunt voice assistants, and those who rely on them, as the technology evolves and bores its way more deeply into our lives.

The problem is that conversational interfaces don’t lend themselves to the sort of open flow of information we’ve become accustomed to in the Google era. By necessity they limit our choices—because their function is to make choices on our behalf.

For example, a search for “news” on the Web will turn up a diverse and virtually endless array of possible sources, from Fox News to Yahoo News to CNN to Google News, which is itself a compendium of stories from other outlets. But ask the Echo, “What’s in the news?” and by default it responds by serving up a clip of NPR News’s latest hourly update, which it pulls from the streaming radio service TuneIn. Which is great—unless you don’t happen to like NPR’s approach to the news, or you prefer a streaming radio service other than TuneIn. You can change those defaults somewhere in the bowels of the Alexa app, but Alexa never volunteers that information. Most people will never even know it’s an option. Amazon has made the choice for them.

And how does Amazon make that sort of choice? The Echo’s cheat sheet doesn’t tell you that, and the company couldn’t give me a clear answer.

Alexa does take care to mention before delivering the news that it’s pulling the briefing from NPR News and TuneIn. But that isn’t always the case with other sorts of queries.

Let’s go back to our friend the kinkajou. In my pre-Echo days, my curiosity about an exotic animal might have sent me to Google via my laptop or phone. Just as likely, I might have simply let the moment of curiosity pass and not bothered with a search. Looking something up on Google involves just enough steps to deter us from doing it in a surprising number of cases. One of the great virtues of voice technology is to lower that barrier to the point where it’s essentially no trouble at all. Having an Echo in the room when you’re struck by curiosity about kinkajous is like having a friend sitting next to you who happens to be a kinkajou expert. All you have to do is say your question out loud, and Alexa will supply the answer. You literally don’t have to lift a finger.

That is voice technology's fundamental advantage over all the human-computer interfaces that have come before it: In many settings, including the home, the car, and wearable gadgets, it's much easier and more natural than clicking, typing, or tapping. In the logic of today's consumer technology industry, that makes its ascendance in those realms all but inevitable.

But consider the difference between Googling something and asking a friendly voice assistant. When I Google "kinkajou," I get a list of websites, ranked according to an algorithm that takes into account all sorts of factors that correlate with relevance and authority. I choose the information source I prefer, then visit its website directly, an experience that could help to further shade or inform my impression of its trustworthiness. Ultimately, the answer comes not from Google, per se, but directly from some third-party authority, whose credibility I can evaluate as I wish.

A voice-based interface is different. The response comes one word at a time, one sentence at a time, one idea at a time. That makes it very easy to follow, especially for humans who have spent their whole lives interacting with one another in just this way. But it makes it very cumbersome to present multiple options for how to answer a given query. Imagine for a moment what it would sound like to read a whole Google search results page aloud, and you'll understand why no one builds a voice interface that way.

That’s why voice assistants tend to answer your question by drawing from a single source of their own choosing. Alexa’s confident response to my kinkajou question, I later discovered, came directly from Wikipedia, which Amazon has apparently chosen as the default source for Alexa’s answers to factual questions. The reasons seem fairly obvious: It’s the world’s most comprehensive encyclopedia, its information is free and public, and it’s already digitized. What it’s not, of course, is infallible. Yet Alexa’s response to my question didn’t begin with the words, “Well, according to Wikipedia … ” She—it—just launched into the answer, as if she (it) knew it off the top of her (its) head. If a human did that, we might call it plagiarism.

The sin here is not merely academic. By not consistently citing the sources of its answers, Alexa makes it difficult to evaluate their credibility. It also implicitly turns Alexa into an information source in its own right, rather than a guide to information sources, because the only entity in which we can place our trust or distrust is Alexa itself. That’s a problem if its information source turns out to be wrong.

The constraints on choice and transparency might not bother people when Alexa’s default source is Wikipedia, NPR, or TuneIn. It starts to get a little more irksome when you ask Alexa to play you music, one of the Echo’s core features. “Alexa, play me the Rolling Stones” will queue up a shuffle playlist of Rolling Stones songs available through Amazon’s own streaming music service, Amazon Prime Music—provided you’re paying the $99 a year required to be an Amazon Prime member. Otherwise, the most you’ll get out of the Echo are 20-second samples of songs available for purchase. Want to guess what one choice you’ll have as to which online retail giant to purchase those songs from?

Amazon’s response is that Alexa does give you options and cite its sources—in the Alexa app, which keeps a record of your queries and its responses. When the Echo tells you what a kinkajou is, you can open the app on your phone and see a link to the Wikipedia article, as well as an option to search Bing. Amazon adds that Alexa is meant to be an “open platform” that allows anyone to connect to it via an API. The company is also working with specific partners to integrate their services into Alexa’s repertoire. So, for instance, if you don’t want to be limited to playing songs from Amazon Prime Music, you can now take a series of steps to link the Echo to a different streaming music service, such as Spotify Premium. Amazon Prime Music will still be the default, though: You’ll only get Spotify if you specify “from Spotify” in your voice command.

What’s not always clear is how Amazon chooses its defaults and its partners and what motivations might underlie those choices. Ahead of the 2016 Super Bowl, Amazon announced that the Echo could now order you a pizza. But that pizza would come, at least for the time being, from just one pizza-maker: Domino’s. Want a pizza from Little Caesars instead? You’ll have to order it some other way.

To Amazon’s credit, its choice of pizza source is very transparent. To use the pizza feature, you have to utter the specific command, “Alexa, open Domino’s and place my Easy Order.” The clunkiness of that command is no accident. It’s Amazon’s way of making sure that you don’t order a pizza by accident and that you know where that pizza is coming from. But it’s unlikely Domino’s would have gone to the trouble of partnering with Amazon if it didn’t think it would result in at least some number of people ordering Domino’s for their Super Bowl parties rather than Little Caesars.

None of this is to say that Amazon and Domino’s are going to conspire to monopolize the pizza industry anytime soon. There are obviously plenty of ways to order a pizza besides doing it on an Echo. Ditto for listening to the news, the Rolling Stones, a book, or a podcast. But what about when only one company’s smart thermostat can be operated by Alexa? If you come to rely on Alexa to manage your Google Calendar, what happens when Amazon and Google have a falling out?

When you say “Hello” to Alexa, you’re signing up for her party. Nominally, everyone’s invited. But Amazon has the power to ensure that its friends and business associates are the first people you meet.

* * *

Business Insider, William Wei

These concerns might sound rather distant—we’re just talking about niche speakers connected to niche thermostats, right? The coming sea change feels a lot closer once you think about the other companies competing to make digital assistants your main portal to everything you do on your computer, in your car, and on your phone. Companies like Google.

Google may be positioned best of all to capitalize on the rise of personal A.I. It also has the most to lose. From the start, the company has built its business around its search engine’s status as a portal to information and services. Google Now—which does things like proactively checking the traffic and alerting you when you need to leave for a flight, even when you didn’t ask it to—is a natural extension of the company’s strategy.

As early as 2009, Google began to work on voice search and what it calls “conversational search,” using speech recognition and natural language understanding to respond to questions phrased in plain language. More recently, it has begun to combine that with “contextual search.” For instance, as Google demonstrated at its 2015 developer conference, if you’re listening to Skrillex on your Android phone, you can now simply ask, “What’s his real name?” and Google will intuit that you’re asking about the artist. “Sonny John Moore,” it will tell you, without ever leaving the Spotify app.

It’s no surprise, then, that Google is rumored to be working on two major new products—an A.I.-powered messaging app or agent and a voice-powered household gadget—that sound a lot like Facebook M and the Amazon Echo, respectively. If something is going to replace Google’s on-screen services, Google wants to be the one that does it.

So far, Google has made what seems to be a sincere effort to win the A.I. assistant race without sacrificing the virtues that made its search page such a dominant force on the Web: credibility, transparency, objectivity. (It's worth recalling: A big reason Google vanquished AltaVista was that it didn't bend its search results to its own vested interests.) Google's voice search does generally cite its sources. And it remains primarily a portal to other sources of information, rather than a platform that pulls in content from elsewhere. The downside to that relatively open approach is that when you say "hello" to Google voice search, it doesn't say hello back. It gives you a link to the Adele song "Hello." Even then, Google isn't above playing favorites with the sources of information it surfaces first: That link goes not to Spotify, Apple Music, or Amazon Prime Music, but to YouTube, which Google owns. The company has weathered antitrust scrutiny over allegations that this amounted to preferential treatment. Google's defense was that it puts its own services and information sources first because its users prefer them.

* * *

YouTube

If there’s a consolation for those concerned that intelligent assistants are going to take over the world, it’s this: They really aren’t all that intelligent. Not yet, anyway.

The 2013 movie Her, in which a mobile operating system gets to know its user so well that they become romantically involved, paints a vivid picture of what the world might look like if we had the technology to carry Siri, Alexa, and the like to their logical conclusion. The experts I talked to, who are building that technology today, almost all cited Her as a reference point—while pointing out that we’re not going to get there anytime soon.

Google recently rekindled hopes—and fears—of super-intelligent A.I. when its AlphaGo software defeated the world champion in a historic Go match. As momentous as the achievement was, designing an algorithm to win even the most complex board game is trivial compared with designing one that can understand and respond appropriately to anything a person might say. That’s why, even as artificial intelligence is learning to recommend songs that sound like they were hand-picked by your best friend or navigate city streets more safely than any human driver, A.I. still has to resort to parlor tricks—like posing as a 13-year-old struggling with a foreign language—to pass as human in an extended conversation. The world is simply too vast, language too ambiguous, the human brain too complex for any machine to model it, at least for the foreseeable future.

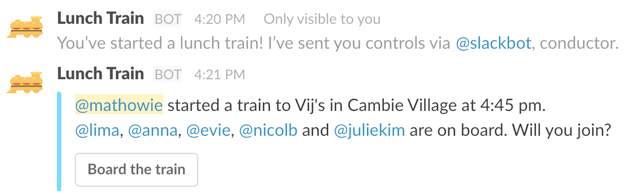

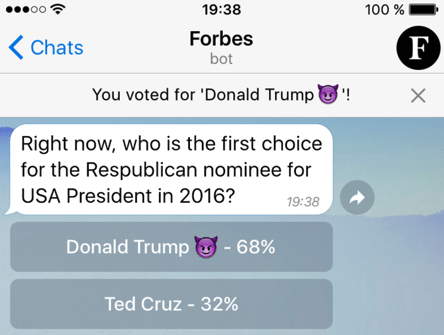

But if we won’t see a true full-service A.I. in our lifetime, we might yet witness the rise of a system that can approximate some of its capabilities—comprising not a single, humanlike Her, but a million tiny hims carrying out small, discrete tasks handily. In January, the Verge’s Casey Newton made a compelling argument that our technological future will be filled not with websites, apps, or even voice assistants, but with conversational messaging bots. Like voice assistants, these bots rely on natural language understanding to carry on conversations with us. But they will do so via the medium that has come to dominate online interpersonal interaction, especially among the young people who are the heaviest users of mobile devices: text messaging. For example, Newton points to “Lunch Bot,” a relatively simple agent that lived in the wildly popular workplace chat program Slack and existed for a single, highly specialized purpose: to recommend the best place for employees to order their lunch from on a given day. It soon grew into a venture-backed company called Howdy.

I have a bot in my own life that serves a similarly specialized yet important role. While researching this story, I ran across a company called X.ai whose mission is to build the ultimate virtual scheduling assistant. It’s called Amy Ingram, and if its initials don’t tip you off, you might interact with it several times before realizing it’s not a person. (Unlike some other intelligent assistant companies, X.ai gives you the option to choose a male name for your assistant instead: Mine is Andrew Ingram.) Though it’s backed by some impressive natural language tech, X.ai’s bot does not attempt to be a know-it-all or do-it-all; it doesn’t tell jokes, and you wouldn’t want to date him. It asks for access to just one thing—your calendar. And it communicates solely by email. Just cc it on any thread in which you’re trying to schedule a meeting or appointment, and it will automatically step in and take over the back-and-forth involved in nailing down a time and place. Once it has agreed on a time with whomever you’re meeting—or, perhaps, with his or her own assistant, whether human or virtual—it will put all the relevant details on your calendar. Have your A.I. cc my A.I.

For these bots, the key to success is not growing so intelligent that they can do everything. It’s staying specialized enough that they don’t have to.

“We’ve had this A.I. fantasy for almost 60 years now,” says Dennis Mortensen, X.ai’s founder and CEO. “At every turn we thought the only outcome would be some human-level entity where we could converse with it like you and I are [conversing] right now. That’s going to continue to be a fantasy. I can’t see it in my lifetime or even my kids’ lifetime.” What is possible, Mortensen says, is “extremely specialized, verticalized A.I.s that understand perhaps only one job, but do that job very well.”

Yet those simple bots, Mortensen believes, could one day add up to something more. “You get enough of these agents, and maybe one morning in 2045 you look around and that plethora—tens of thousands of little agents—once they start to talk to each other, it might not look so different from that A.I. fantasy we’ve had.”

That might feel a little less scary. But it still leaves problems of transparency, privacy, objectivity, and trust—questions that are not new to the world of personal technology and the Internet but are resurfacing in fresh and urgent forms. A world of conversational machines is one in which we treat software like humans, letting them deeper into our lives and confiding in them more than ever. It’s one in which the world’s largest corporations know more about us, hold greater influence over our choices, and make more decisions for us than ever before. And it all starts with a friendly “Hello.”

www.businessinsider.com/ai-assistants-are-taking-over-2016-4