It’s not just about robots. These seven other technologies will transform the future of work.

Advances in robotics and artificial intelligence aren’t the only tech trends reshaping the future of work. Rather, they are among the most visible of a confluence of powerful overlapping developments that strengthen, reinforce, and accelerate each other. The combination of these forces has led analysts to speak of a new era in the evolution of the global economy. Below, a primer on seven other new technologies driving that transition:

Digitization

One of the most remarkable and durable predictions about the pace of technological change in the modern era is Moore’s Law, the observation that the number of transistors on an integrated circuit doubles approximately every one or two years. Moore’s Law gets its name from Gordon Moore, co-founder of Intel, who first articulated the idea in 1965. Initially, Moore projected the number of transistors packed onto a silicon wafer to double annually for another decade. In 1975, he revised his estimate to doubling every two years and guessed it might hold a decade longer. In fact, Moore’s rule of thumb has held true for more than five decades and is used to guide long-term planning throughout the industrialized world. The latest Intel processor contains about 1.75 billion transistors, compared with 2,300 transistors on the first microchip Intel sold commercially back in 1971.
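To get a feel for what steady doubling implies, here is a minimal sketch in Python. It assumes an idealized, perfectly regular doubling period, which real chips only approximate. The function name `projected_transistors` and its parameters are illustrative, not taken from Intel or from Moore; the 2,300-transistor starting point in 1971 is the figure cited above.

```python
def projected_transistors(start_count, start_year, year, doubling_period_years=2.0):
    """Project a transistor count, assuming one doubling per fixed period.

    This is an idealized model: it treats Moore's rule of thumb as an
    exact exponential, which is an assumption made for illustration.
    """
    elapsed_years = year - start_year
    doublings = elapsed_years / doubling_period_years
    return start_count * 2 ** doublings


if __name__ == "__main__":
    # Start from the 2,300 transistors on Intel's first commercial chip (1971).
    for year in (1971, 1981, 1991, 2001, 2011):
        count = projected_transistors(2300, 1971, year)
        print(f"{year}: about {count:,.0f} transistors")
```

Changing `doubling_period_years` from 2.0 to 1.0 reproduces Moore’s original 1965 assumption of annual doubling, and shows how sensitive the long-run projection is to that single number.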

Many experts think the physics of metal oxide technology will make it impractical to shrink transistors after around 2020. But even at a slower rate, the implications of such extraordinary gains in our ability to process and store data are far-reaching. If the invention of the microchip was the key technological breakthrough that unleashed the “Third Industrial Revolution”—destroying jobs in a slew of sectors including media, retail, financial, and legal services—unrelenting exponential advances in computing power have facilitated other profound new technological developments that now define the Fourth Industrial Revolution.

The Internet of Things

Smaller, faster transistors have made it possible for us to embed sensors and actuators in almost every imaginable object: not just computers, but also machines, hand-held gadgets, home appliances, cars, roads, product packaging, clothing, even humans themselves. Advances in mobile and wireless technologies have made it possible for all those “things” to exchange data with each other, creating, in effect, an “Internet of Things.” This network of digitally enabled things has grown at such a staggering pace that, in data terms, it dwarfs the Internet that we use to connect with each other. Cisco predicts that by 2020, the number of connected things will exceed 50 billion, the equivalent of six objects for every human on the planet.

The real significance of the Internet of Things lies not in the profusion of data-gathering sensors but in the fact that these sensors can be connected, and that we can evaluate and act on the data collected via this new digital infrastructure in real time no matter what source it comes from or form it assumes. Suddenly every aspect of our lives can be made “smart.”

Big Data

Being able to collect loads of data and knowing how to analyze and interpret it are very different propositions. Today, more data crosses the Internet every second than was stored in the entire Internet just 20 years ago. Large companies generate data in petabytes, each a quadrillion bytes, or the equivalent of 20 million filing cabinets’ worth of text. Gartner, a technology consultancy, defines Big Data in terms of “three Vs”: volume, velocity, and variety.

As the Economist put it, “Today we have more information than ever. But the importance of all that information extends beyond simply being able to do more, or know more, than we already do. The quantitative shift leads to a qualitative shift. Having more data allows us to do new things that weren’t possible before. In other words: More is not just more. More is new. More is better. More is different.”

Big Data will not only provide valuable new insights into consumer behavior, but will also change the way we work in all sorts of ways. It could change the hiring process, for example, and many employers are already using sensors and software to monitor employee performance and, indeed, their every move. In theory, Big Data can also work the other way, enabling prospective employees to ferret out employers who treat their workers badly. But my guess, for what it’s worth, is that Big Data will help tilt the balance of power decisively in favor of companies at the expense of workers.

Cloud Computing

What we have come to call “the cloud” is made up of networks of data centers that deliver services over the Internet. Unlike stand-alone computers, whose performance depends on the speed of their processor chips, computers connected to the cloud can be made more powerful without changes to their hardware. The Economist has called the shift to the cloud “the biggest upheaval in the IT industry since smaller, networked machines dethroned mainframe computers in the early 1990s.”

This shift will only accelerate as Moore’s Law comes to an end. Firms will upgrade their own computers and servers less often and rely instead on continuous improvement of services by cloud providers.

The clear leader in cloud computing is Amazon, which launched a separate cloud business, Amazon Web Services, in 2006. Today AWS boasts more than a million customers and offers a myriad of different services including encryption, data storage and machine learning. Other players include Google, Microsoft, Alibaba, Baidu and Tencent. These firms look well-positioned to disrupt traditional sellers of hardware and software. For small businesses, meanwhile, being able to purchase computer power, storage capacity, and applications as needed from the cloud will help lower costs, boost efficiency, and make it easier to deliver results quickly.

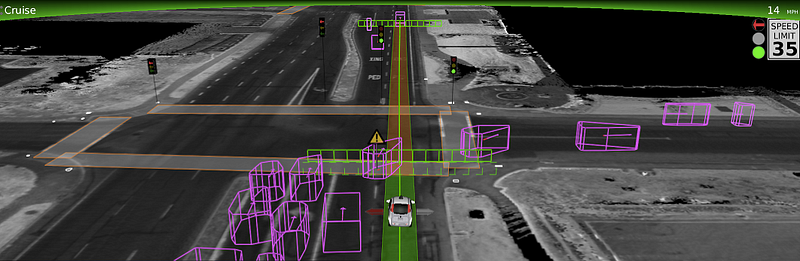

Self-driving Vehicles

Google surprised everyone with its 2010 announcement that it had developed a fleet of seven “self-piloting” Toyota Prius Hybrids capable of navigating public roadways and sensing and reacting to changes in the environment around them. Today, the idea of “autonomous vehicles” no longer feels like sci-fi fantasy. Audi, BMW, GM, Nissan, Toyota, and Volvo have all announced plans to unveil autonomous vehicles by 2020. Some experts estimate that by that year there could be as many as 10 million self-driving vehicles on the road.

The death of a Tesla driver using “autopilot” technology this past May marked the first fatality for self-driving cars. It has raised questions about the safety of autonomous vehicles and, at the very least, highlighted the need for a new legal framework to sort out questions of liability. Still, governments have strong incentives to encourage the adoption of self-driving vehicles because of their potential to ease urban congestion and drastically reduce public spending on roads, highways, and parking places. KPMG predicts all the technological and regulatory components necessary for widespread adoption of autonomous vehicles could fall into place as early as 2025. The employment implications of that shift are huge: according to data from the U.S. Census Bureau, truck, delivery, or tractor driver is the most common occupational category in 29 of the 50 American states.

The Platform Economy

The widespread use of autonomous vehicles will have an even greater impact when paired with services like Uber and Lyft, which create online platforms for independent workers to contract out specific services to individual customers. KPMG estimates that combining autonomous vehicles with Uber- or Lyft-like arrangements could reduce the number of cars in operation by as much as 90 percent.

The power of online marketplaces is not limited to the transportation sector. Online task brokers like TaskRabbit, Fiverr, USource, and Amazon’s Mechanical Turk have given rise to a new model of work that has been called the “gig economy,” the “platform economy,” or the “sharing economy.” Such platforms create a new marketplace for work by unbundling jobs into discrete tasks and connecting sellers directly with consumers. They make it possible to exchange not just services, but also assets and physical goods, as in the case of Airbnb, eBay, and Alibaba.

A recent study by the JPMorgan Chase Institute found that, as of September 2015, nearly 1 percent of U.S. adults earned income via the gig economy, up from just 0.1 percent of adults in 2012.

Many experts extol the virtues of the gig economy, pointing to the gig workers’ freedom to choose their hours and work from home. But these more flexible arrangements have a dark side. In many economies, particularly the U.S., employers shoulder the burden of providing health insurance, compensation for injury on the job, and retirement benefits. Freelancers have to take care of all those things on their own. While some highly talented stars will thrive as independent contractors, on balance, the gig economy, like advances in robotics, AI, and Big Data, gives employers the upper hand.

3D Printing

3D printing, sometimes called “additive manufacturing,” is often mentioned among the technologies that will change the way we work. Proponents predict that in the not-too-distant future, 3D printers will be able to manufacture everything from auto parts to shoes to human organs. Some think 3D printing will lead to wholesale “reshoring” of manufacturing from low-wage economies like China back to advanced economies in the West, and might ultimately eliminate millions of manufacturing jobs.

But the range of products that can be produced cost-effectively with 3D printers remains relatively limited. 3D-printed parts aren’t as strong as traditionally manufactured parts. Generally speaking, you can only print in plastic, and the plastic required for 3D printing is expensive, meaning that it makes little sense to use the technology to produce large items on a mass scale. The programming and computer modeling necessary to print unique items are time-consuming and expensive. Count me among the skeptics. Still, even if the impact falls short of the rhetoric, 3D printing is another new technology that seems more likely to eliminate jobs than create new ones.