For weeks, a small team of security researchers and developers has been putting the finishing touches on a new privacy app, which its founder says can nix some of the hidden threats that mobile users face, often without realizing it.

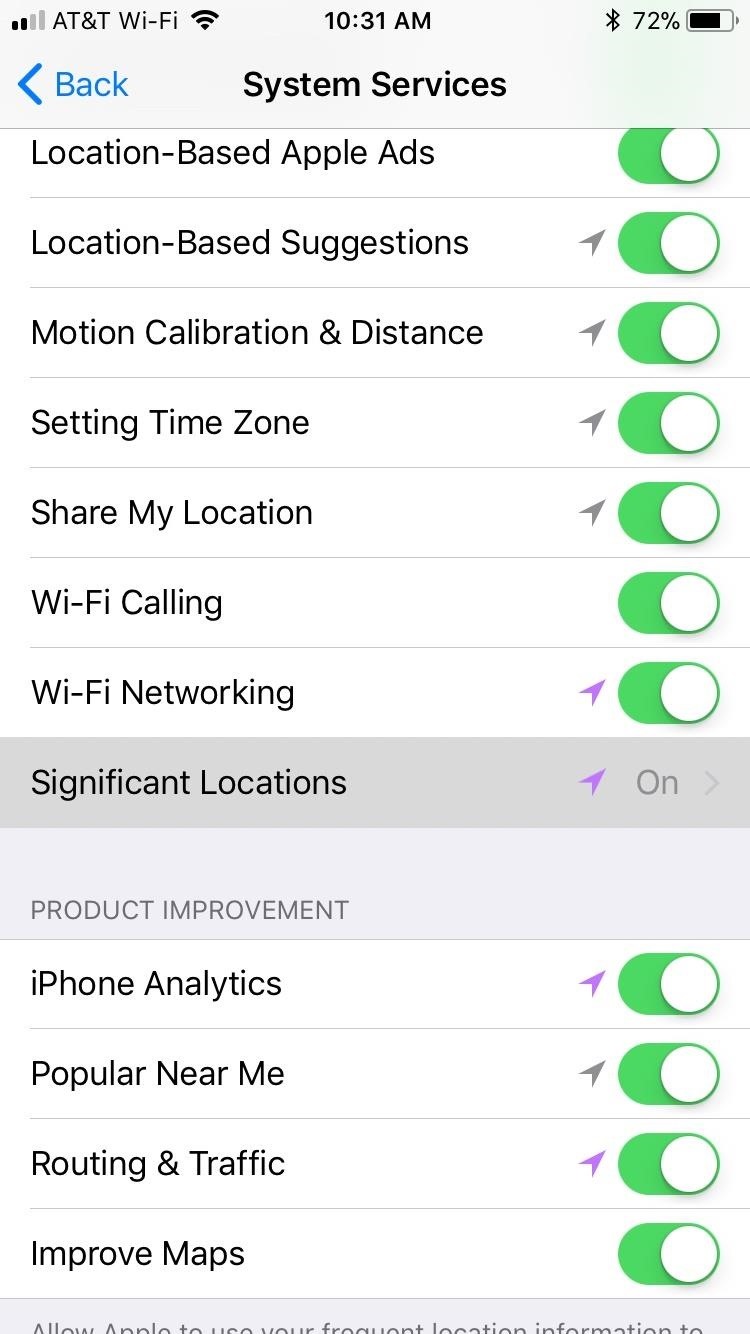

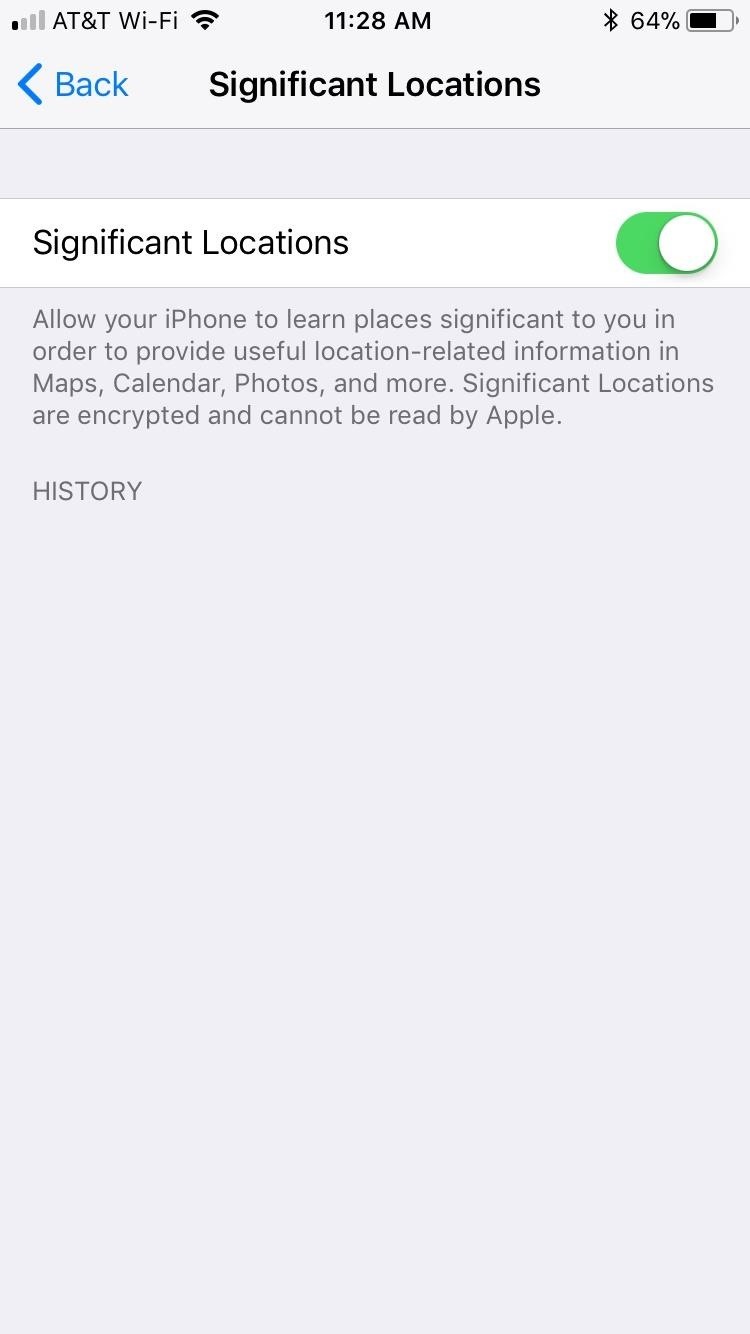

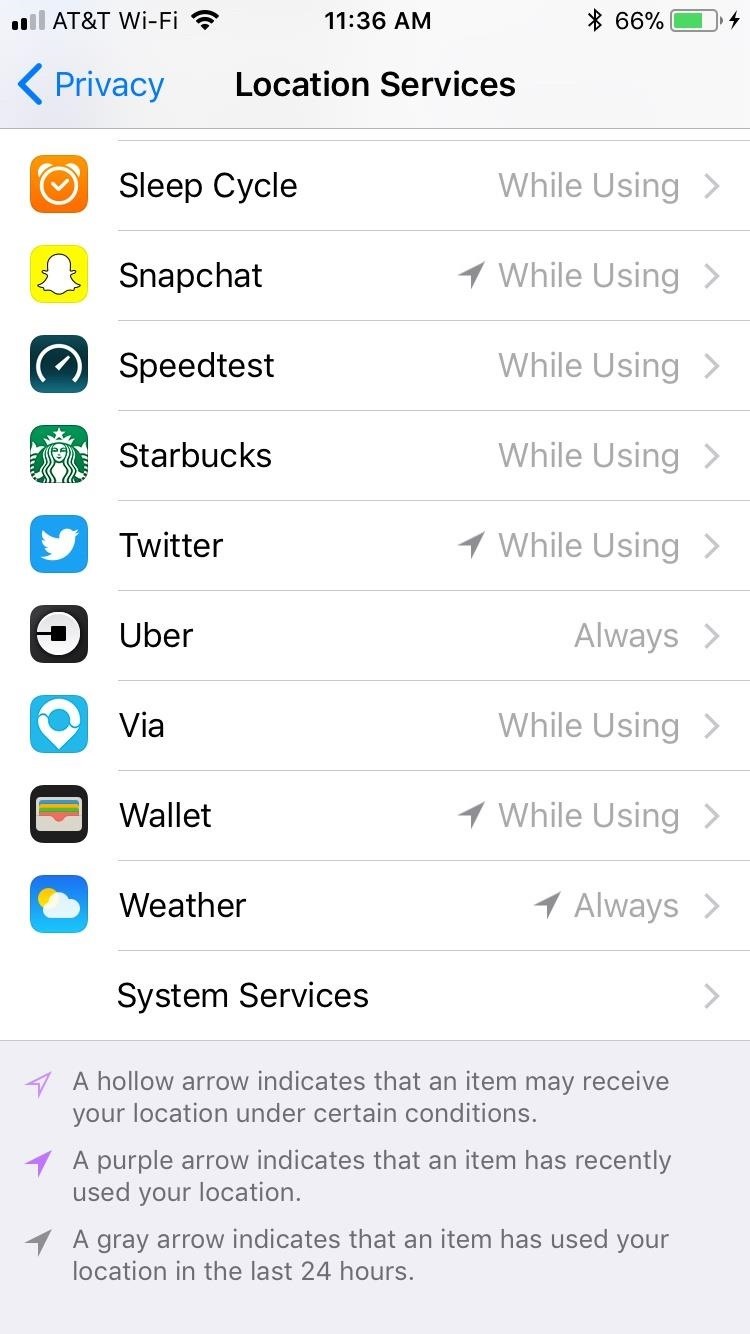

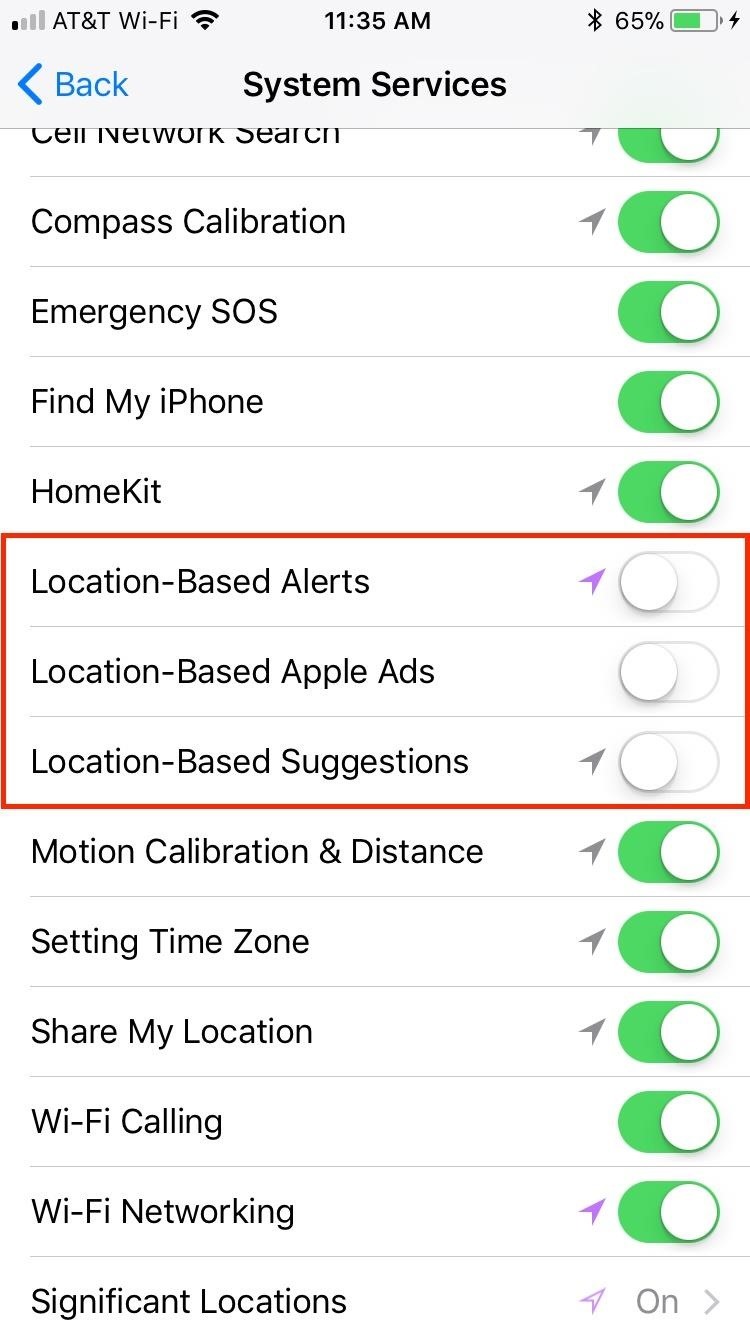

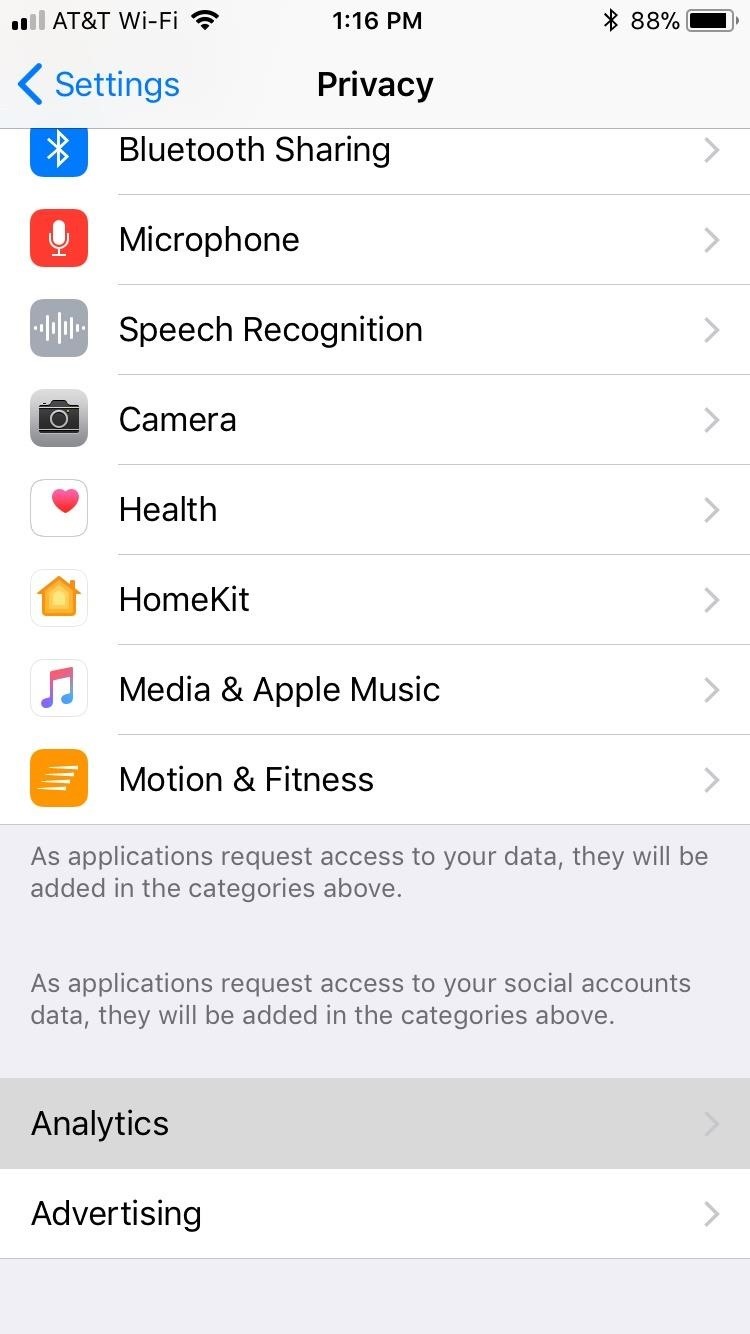

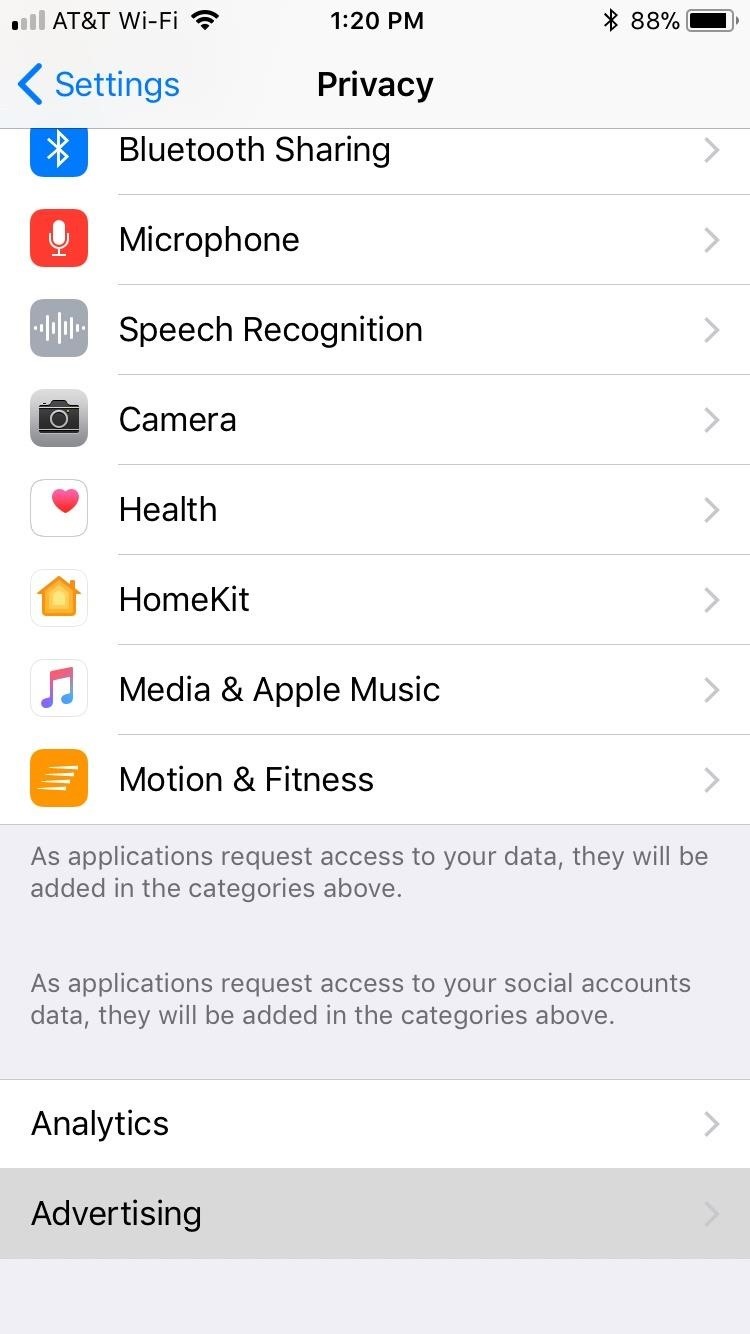

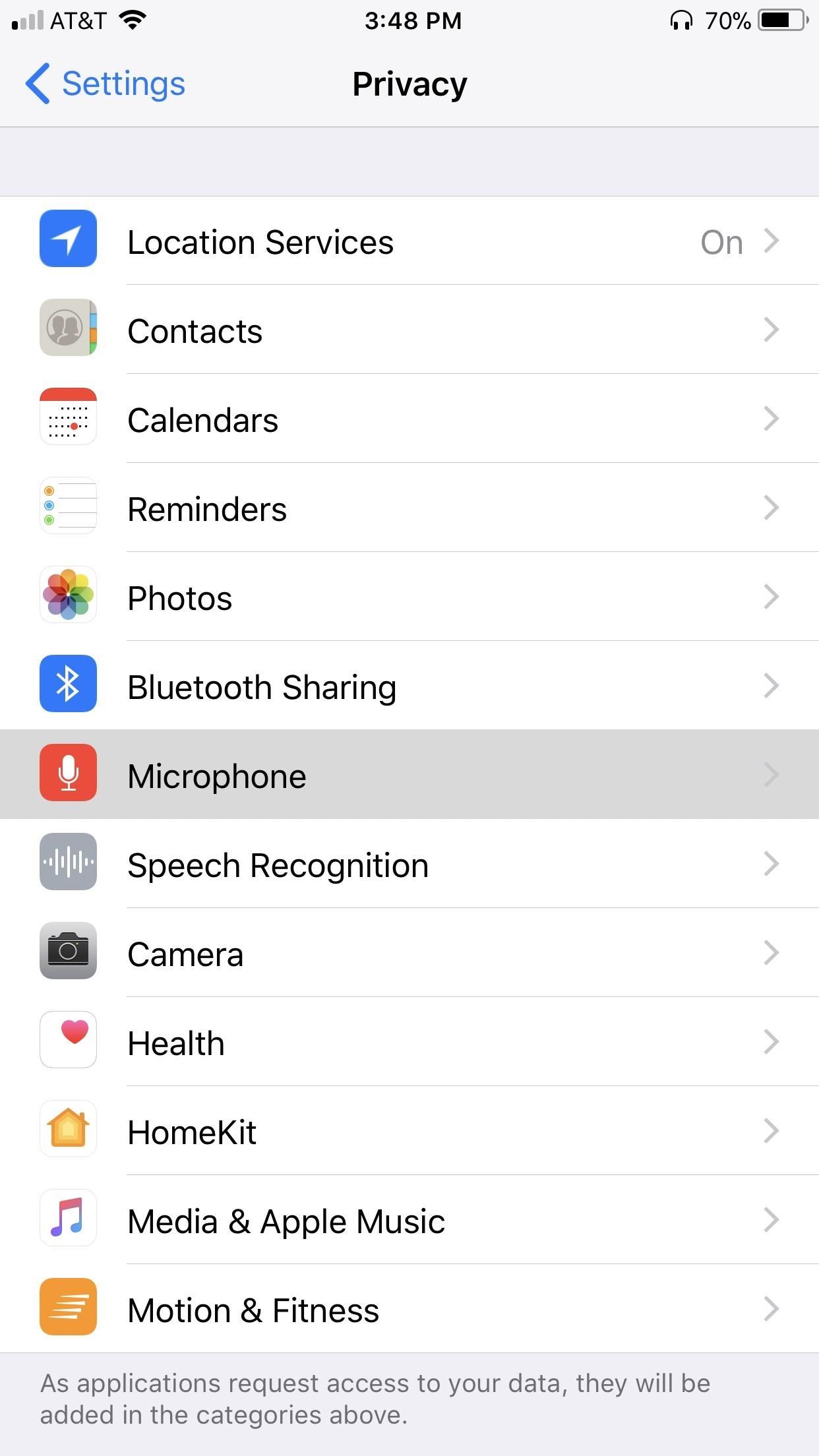

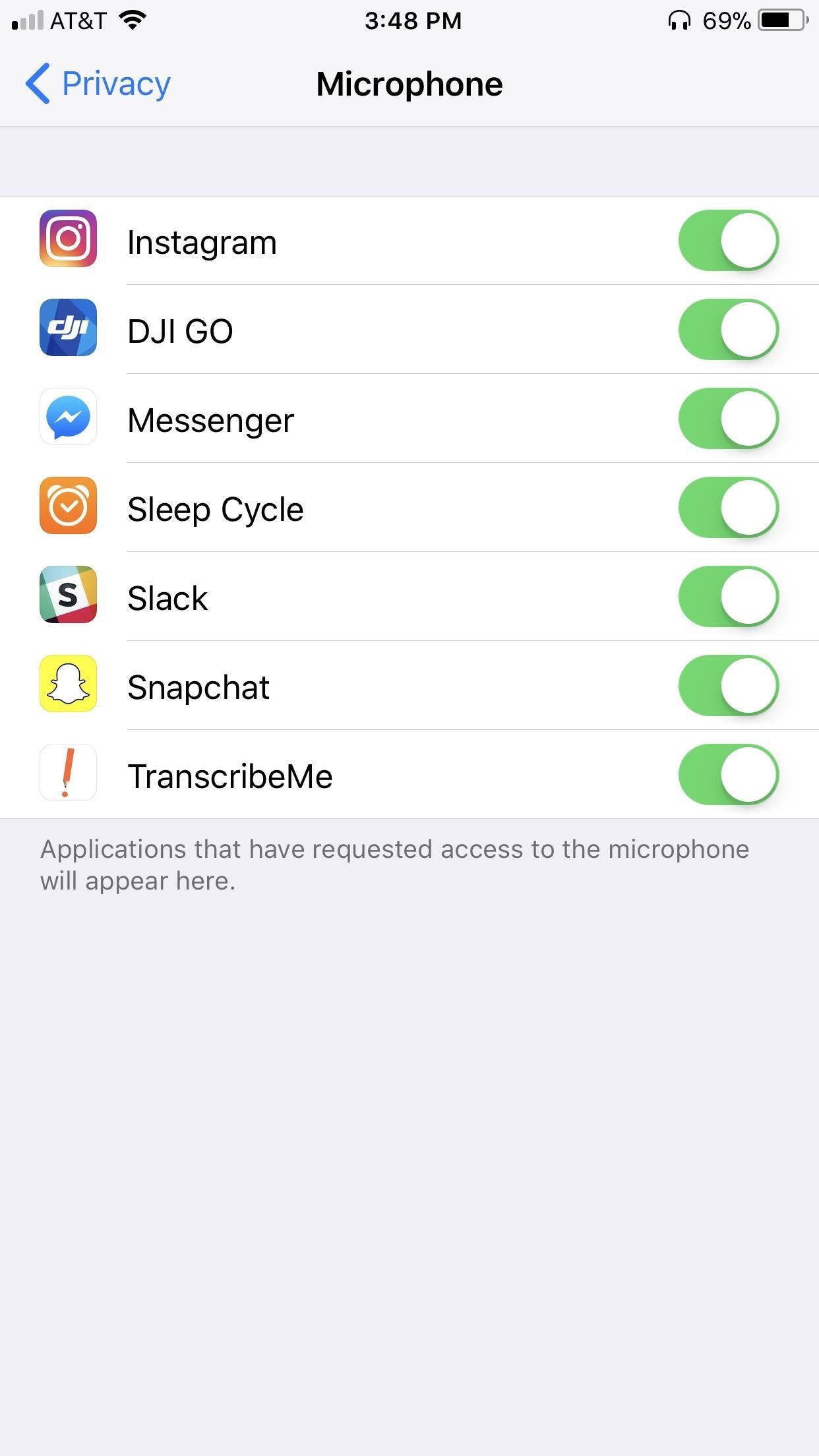

Phones track your location, apps siphon off your data, and aggressive ads try to grab your attention. Your phone has long been a beacon of data, broadcasting to ad networks and data trackers that try to build up a profile of you wherever you go, all to sell you things you’ll never want.

Will Strafach knows that all too well. A security researcher and former iPhone jailbreaker, Strafach has shifted his time to digging into apps for insecure, suspicious and unethical behavior. Last year, he found AccuWeather was secretly sending precise location data without a user’s permission. And just a few months ago, he revealed a list of dozens of apps that were sneakily sending their users’ tracking data to data monetization firms without the users’ explicit consent.

Now his team, including co-founder Joshua Hill and chief operating officer Chirayu Patel, will soon bake those findings into its new “smart firewall” app, which he says will filter and block traffic that invades a user’s privacy.

“We’re in a ‘wild west’ of data collection,” he told me in a call last week, “where data is flying out from your phone under the radar, not because people don’t care but there’s no real visibility and people don’t know it’s happening.”

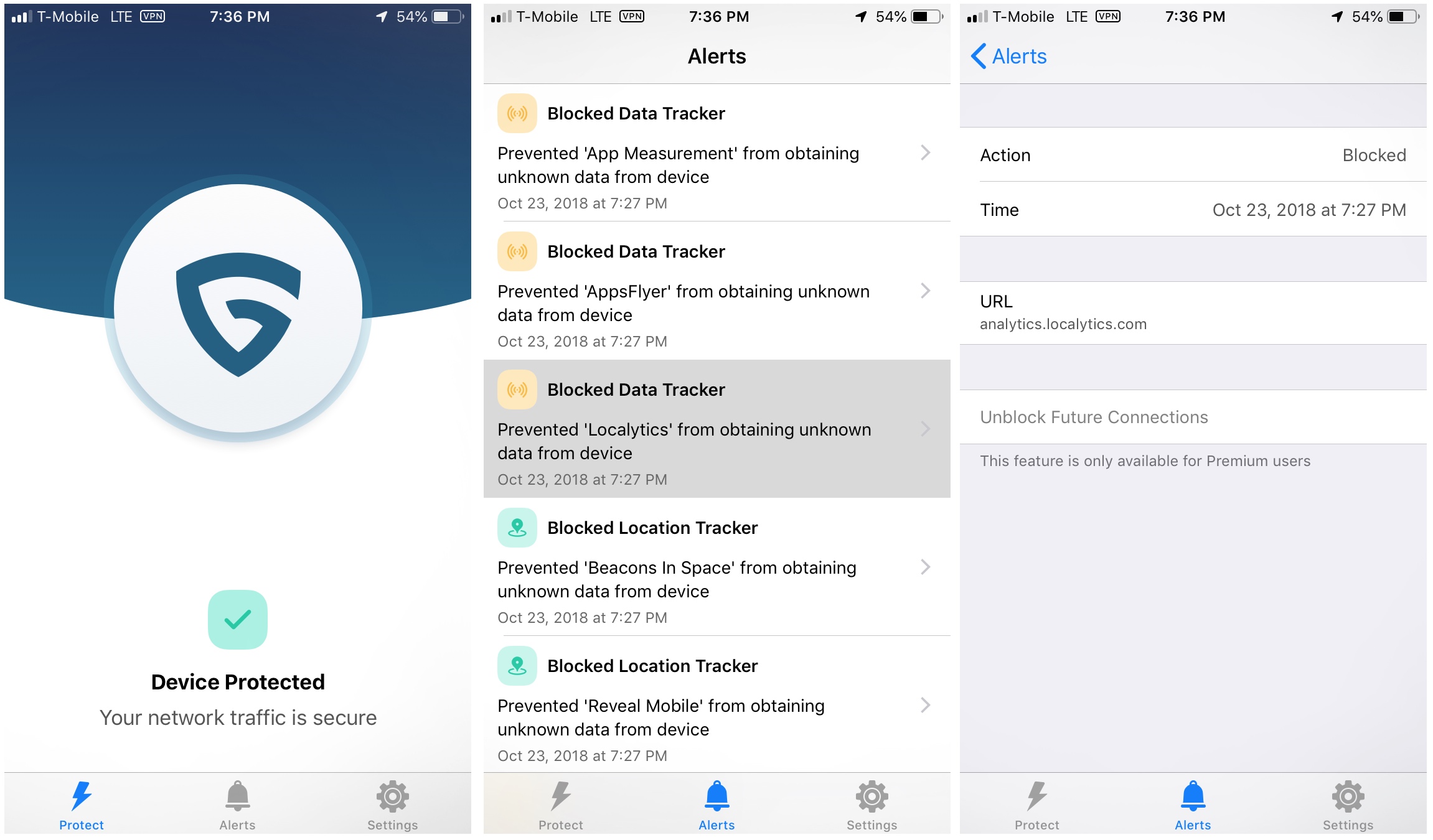

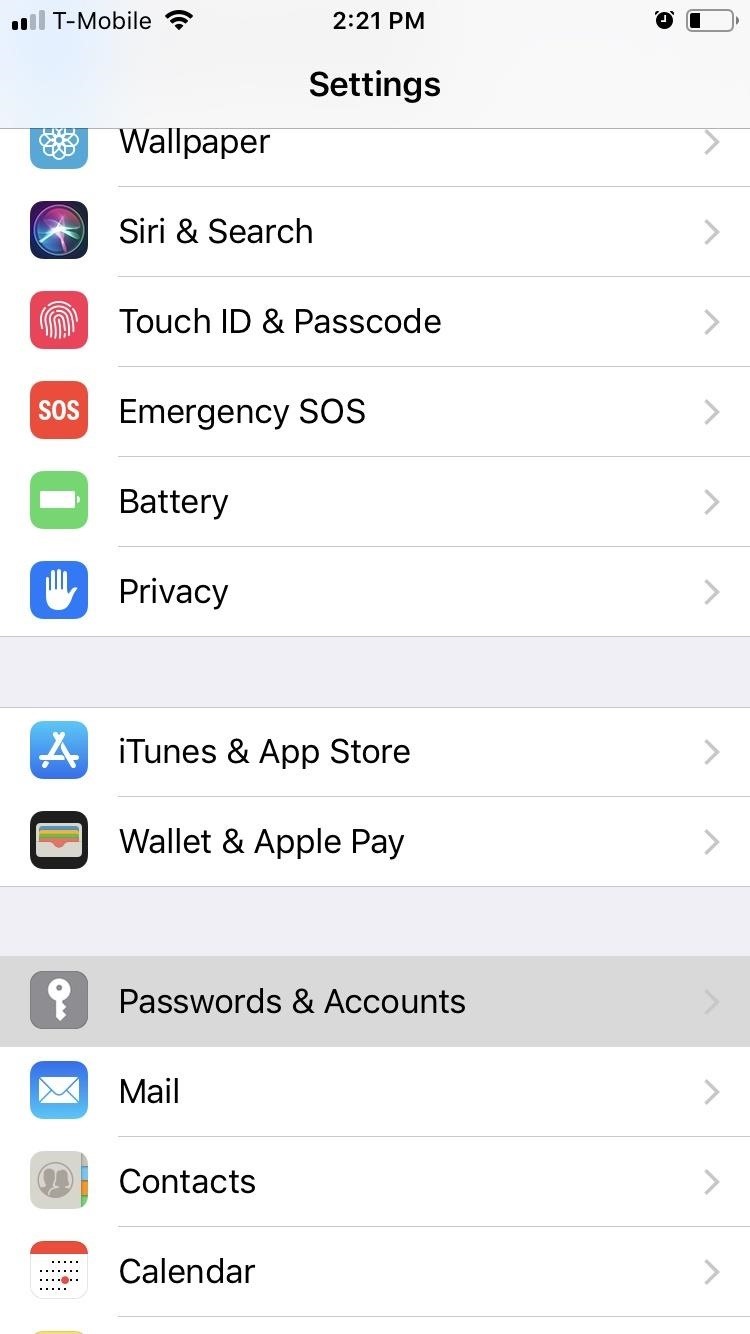

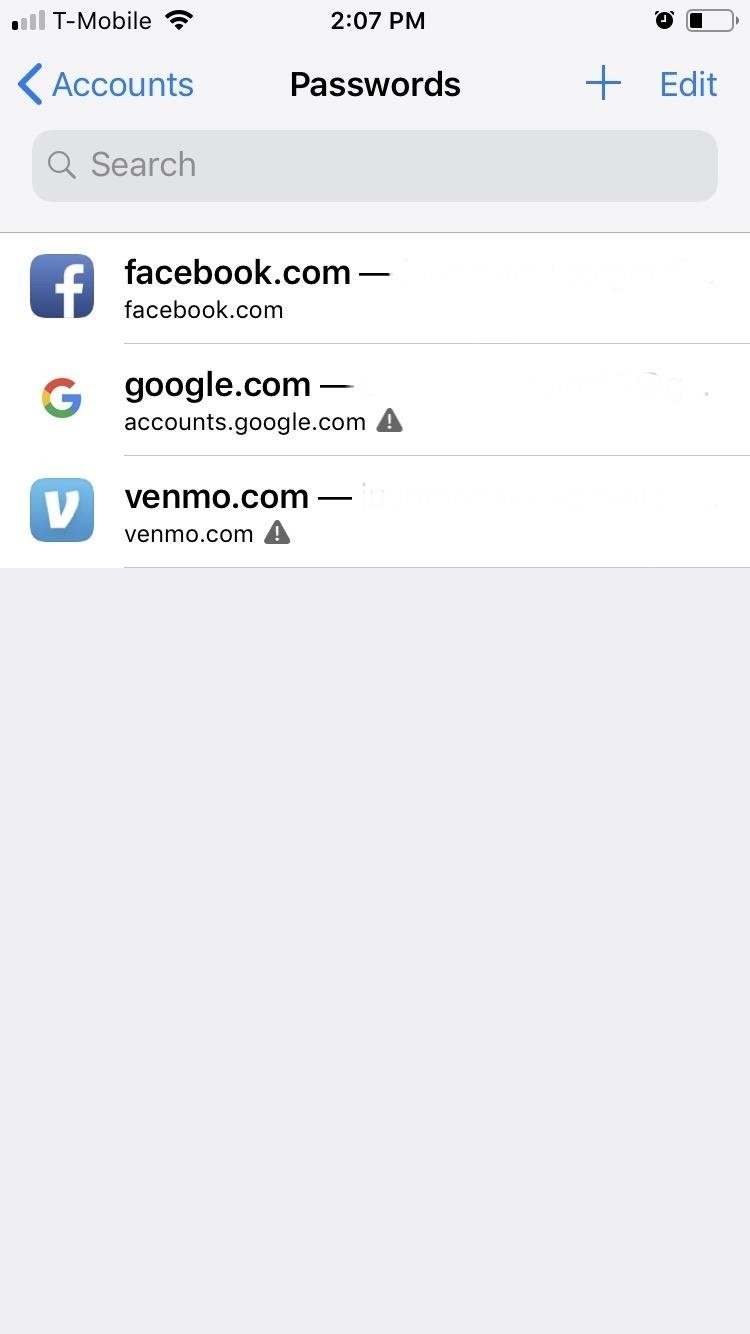

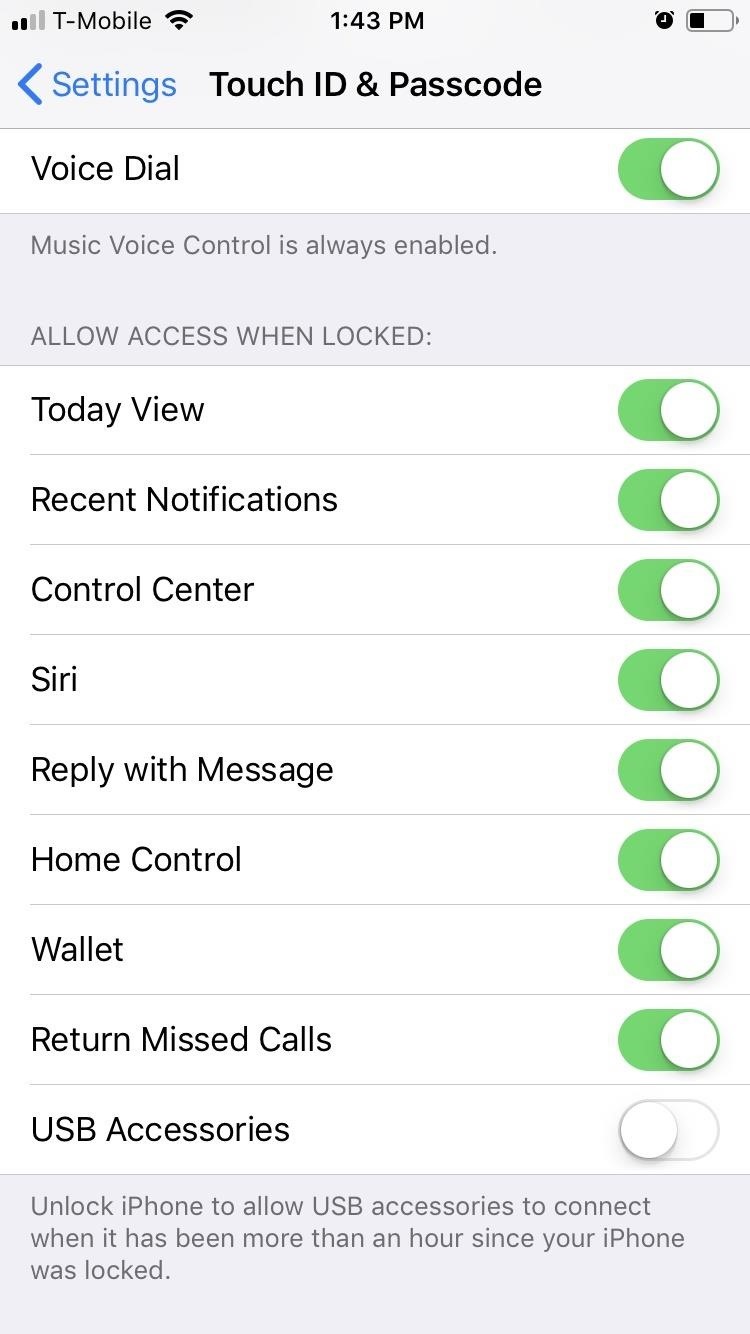

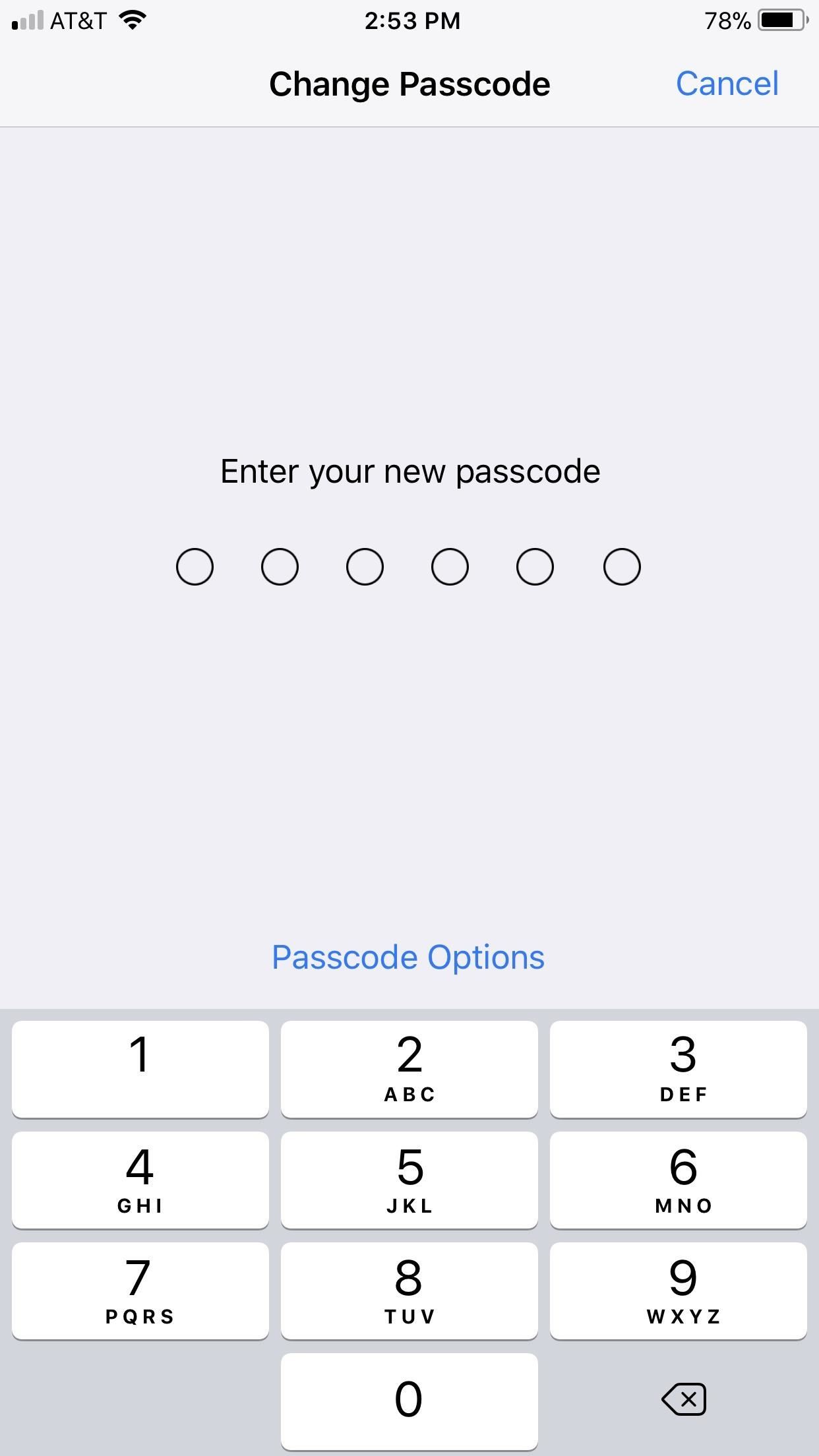

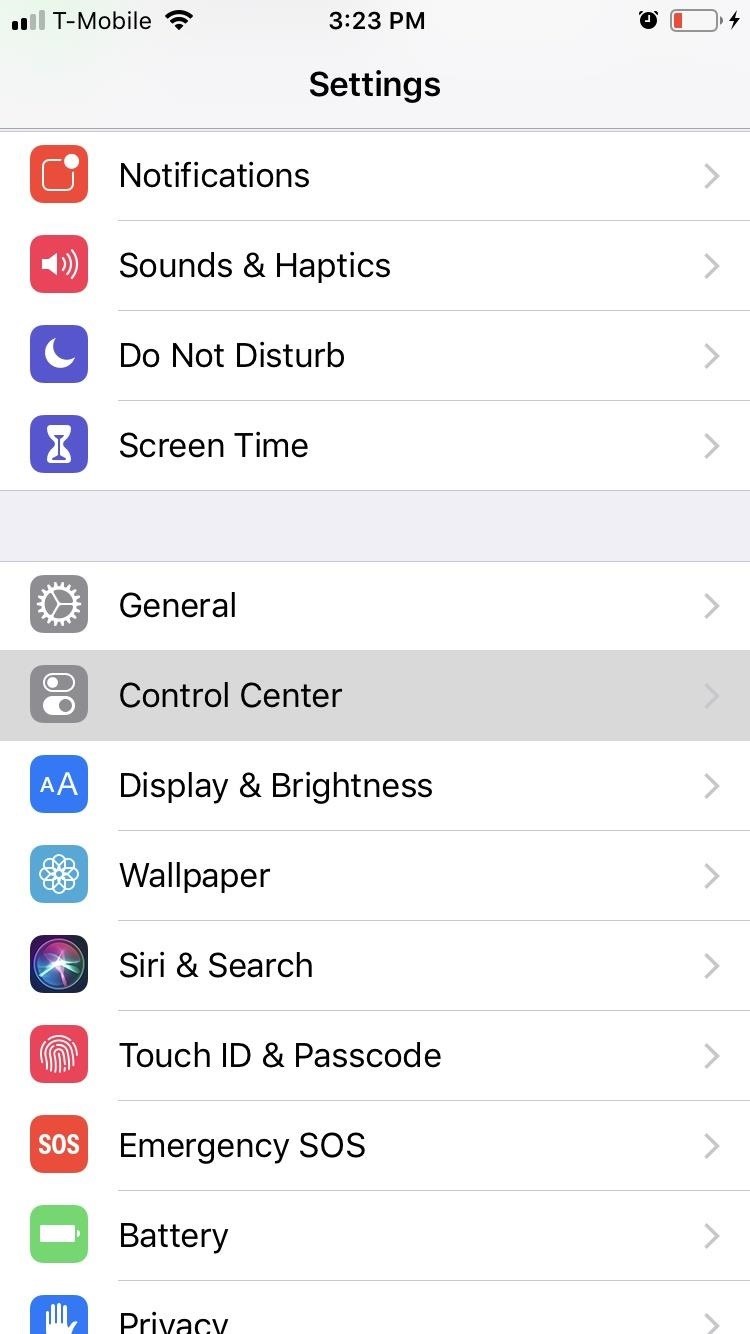

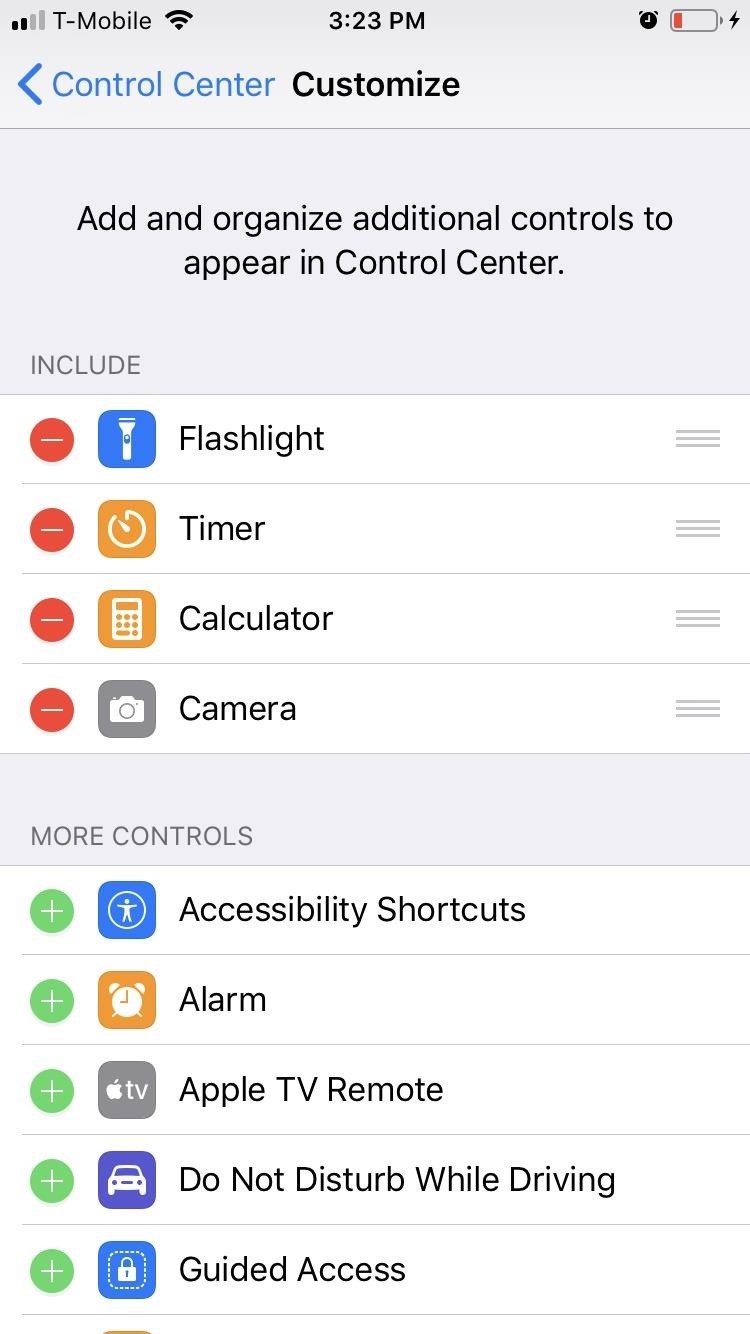

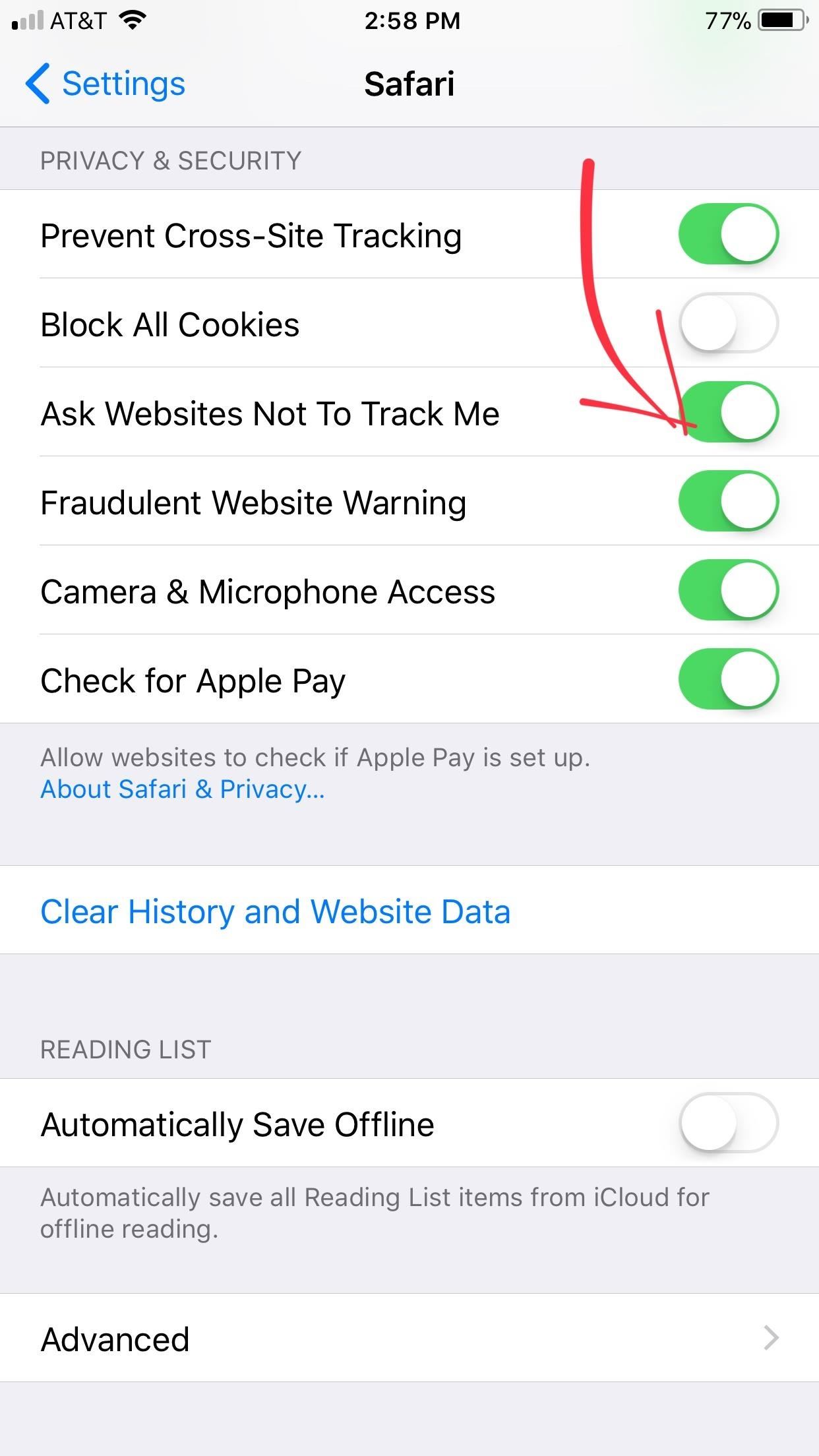

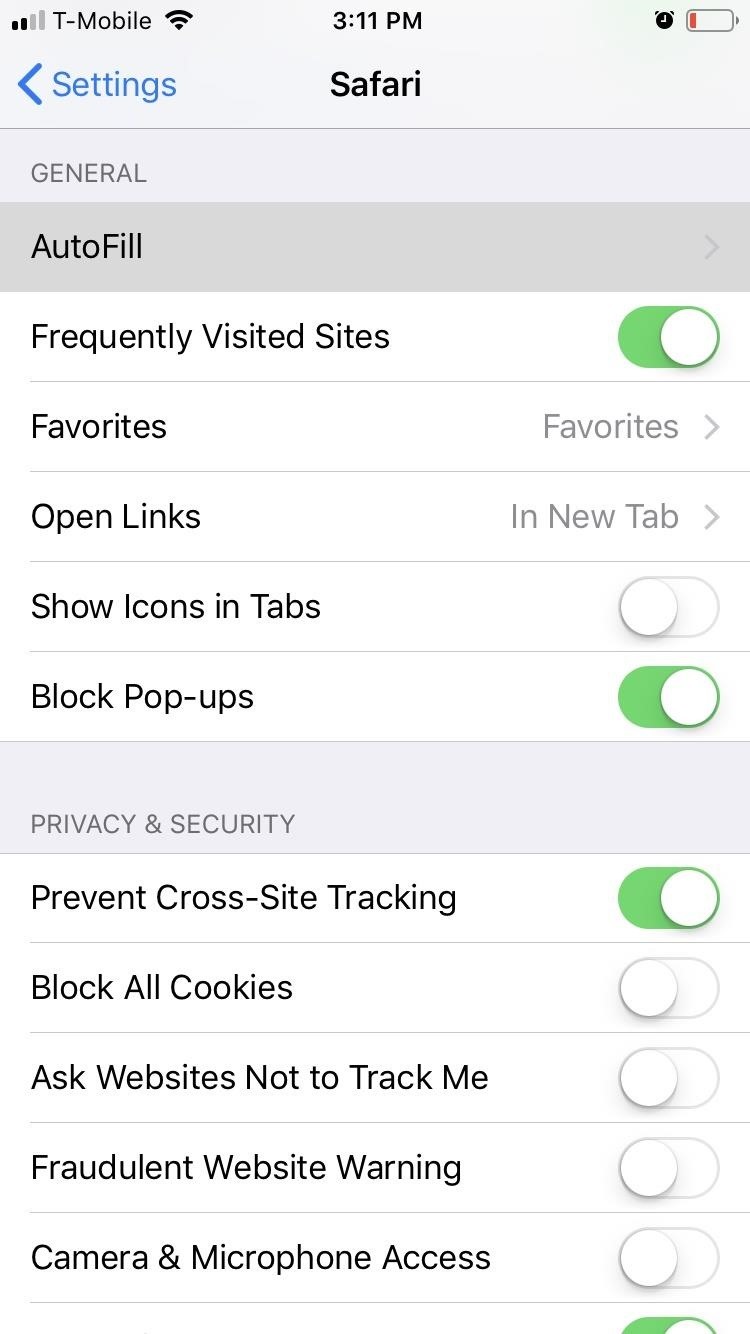

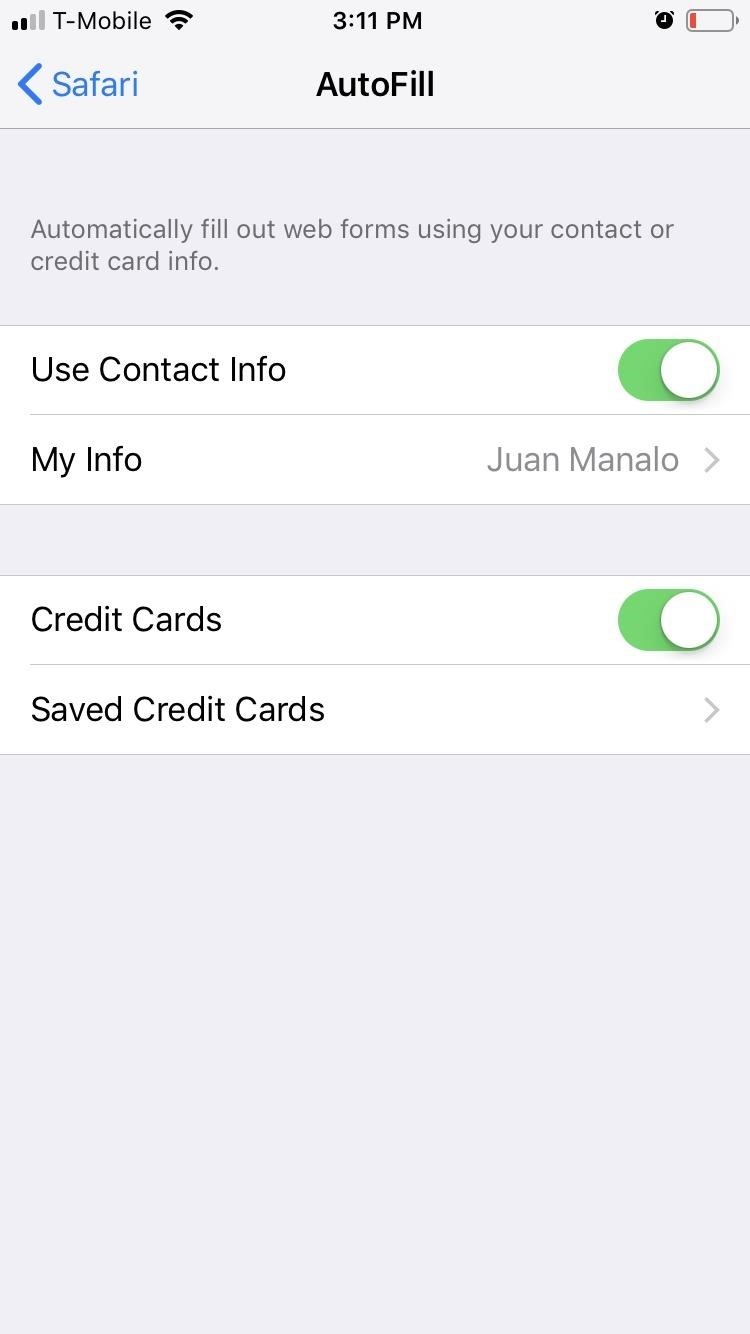

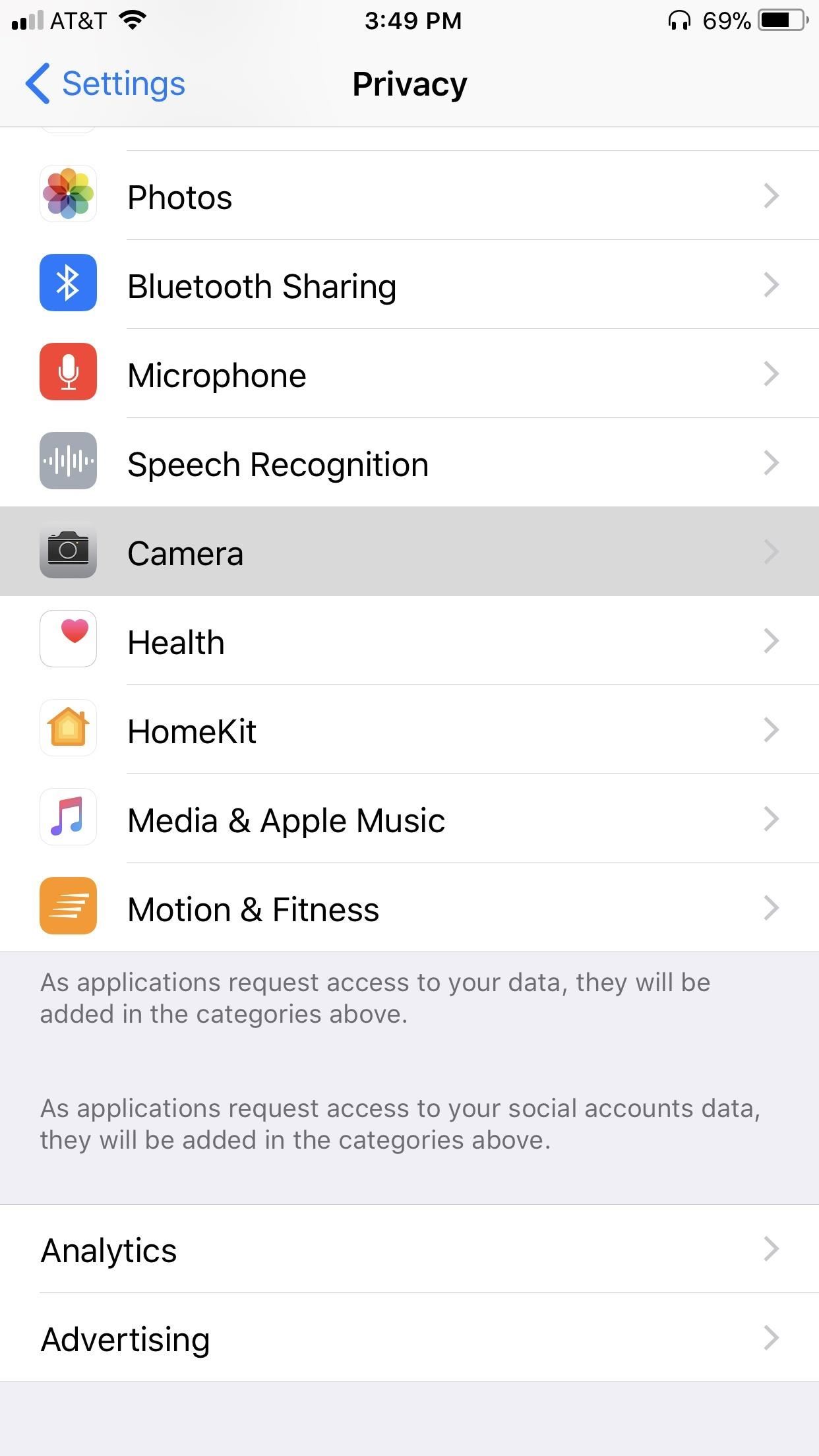

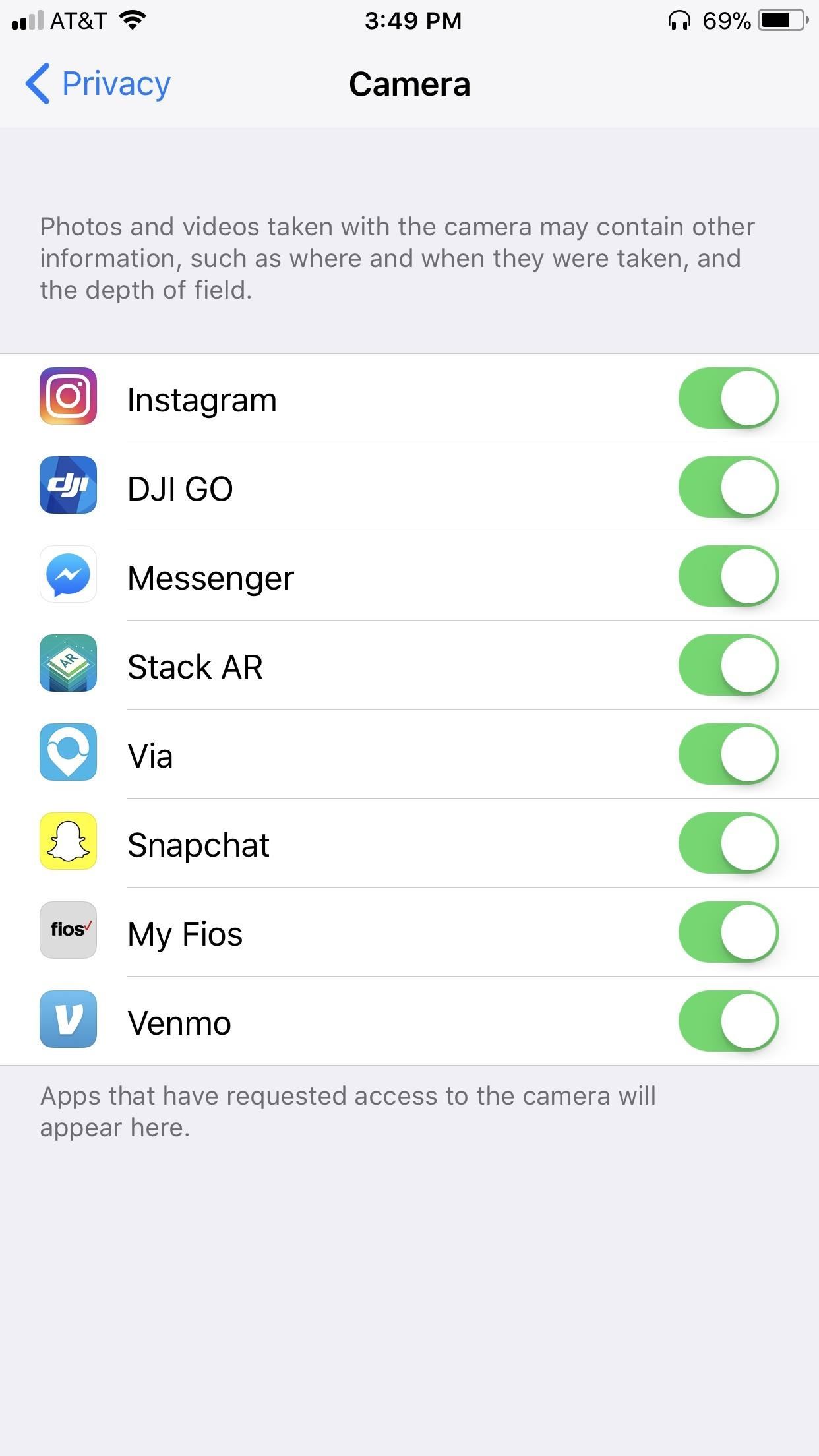

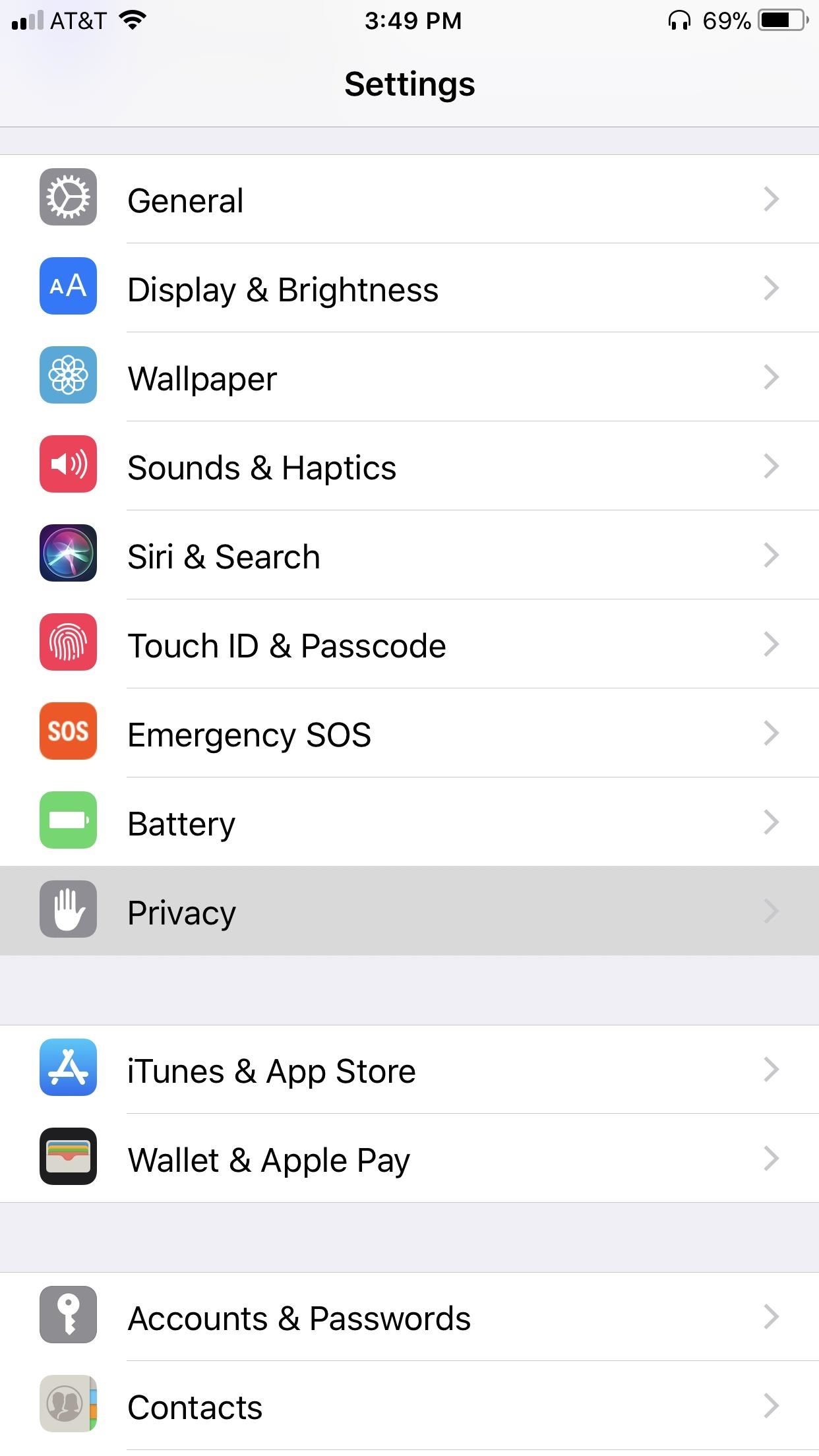

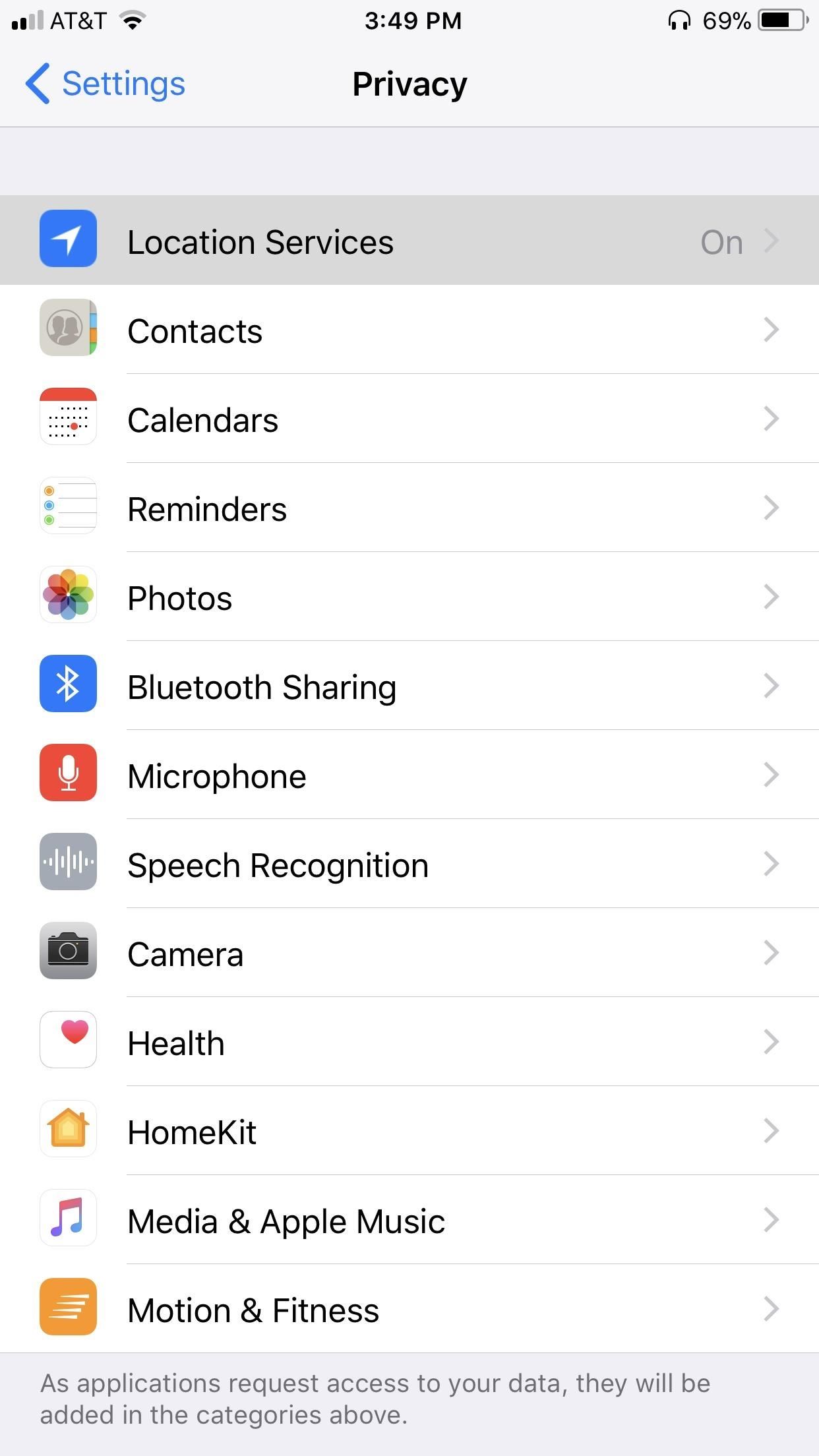

At its heart, the Guardian Mobile Firewall, currently in a closed beta, funnels all of an iPhone or iPad’s internet traffic through an encrypted virtual private network (VPN) tunnel to Guardian’s servers, outsourcing the filtering and enforcement to the cloud so the work doesn’t drag down the device’s performance or battery. It means the Guardian app can near-instantly spot if another app is secretly sending a device’s tracking data to a tracking firm, warning the user or giving them the option to stop it in its tracks. The aim isn’t to prevent a potentially dodgy app from working properly, but to give users awareness of, and choice over, what data leaves their device.

Strafach described the app as “like a junk email filter for your web traffic,” and you can see from one of the app’s dedicated tabs what data gets blocked and why. A future version will allow users to modify their precise geolocation or block it from being sent to certain servers. Strafach said the app will later tell a user how many times an app accesses device data, such as their contact list.

But unlike other ad and tracker blockers, the app doesn’t use overkill third-party lists that prevent apps from working properly. Instead, it takes a tried-and-tested approach drawn from the team’s own research. The team periodically scans a range of apps in the App Store to identify problematic and privacy-invasive behavior, and those findings are fed into the app so it improves over time. If an app is known to have security issues, the Guardian app can alert the user to the threat. The team plans to keep building machine learning models to help identify new threats, including so-called “aggressive ads” that hijack your mobile browser and redirect you to dodgy pages or apps.

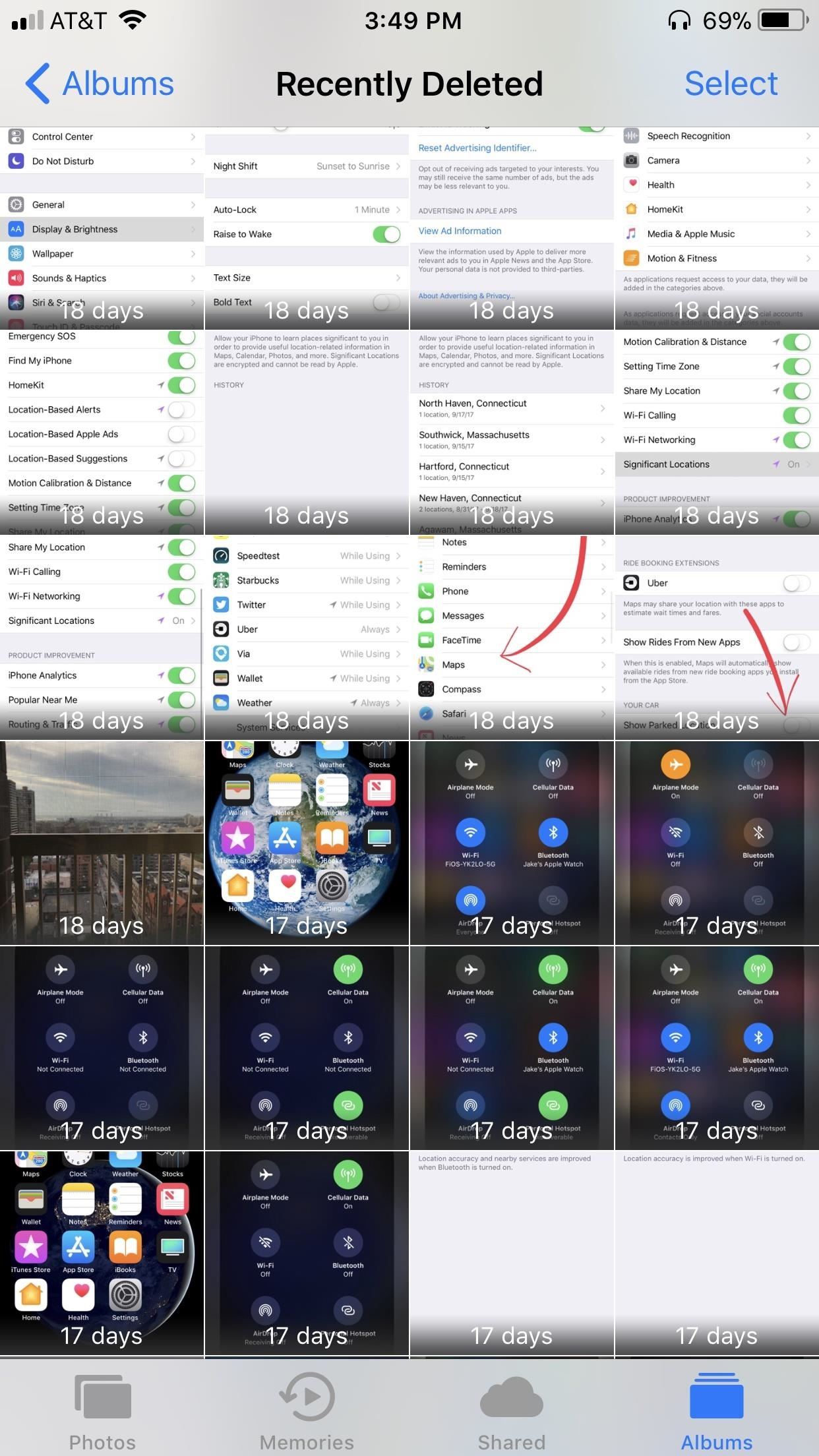

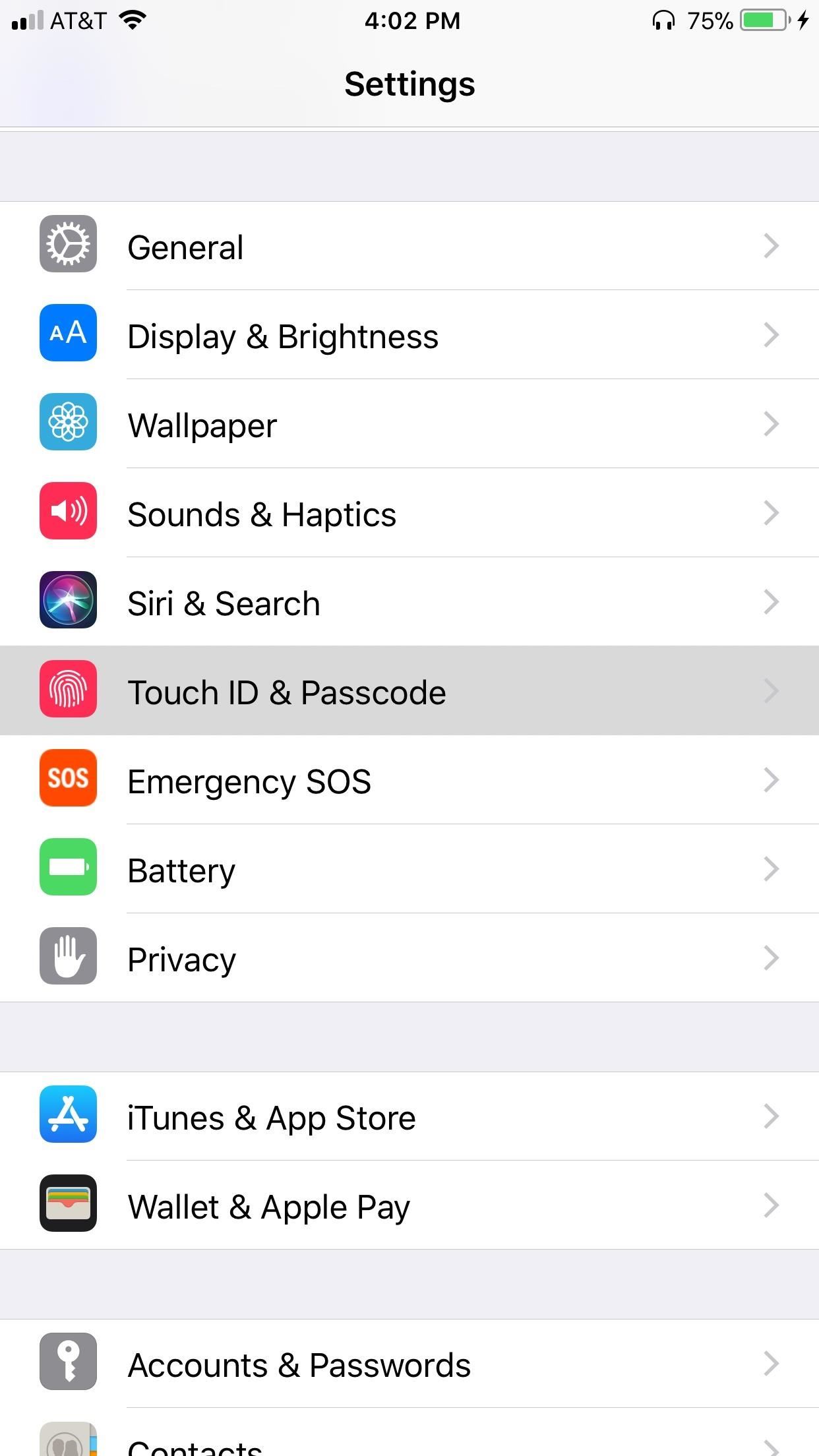

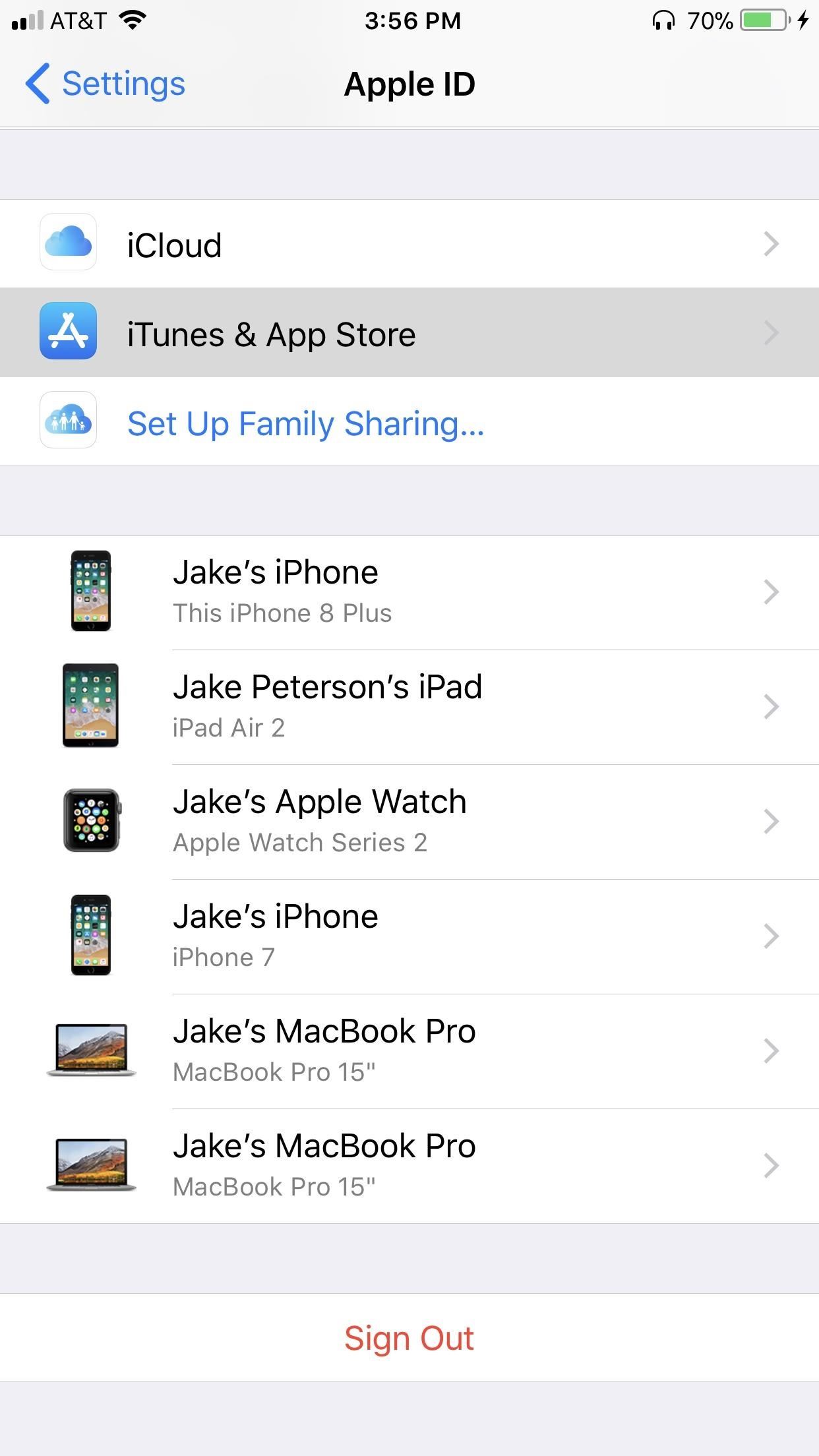

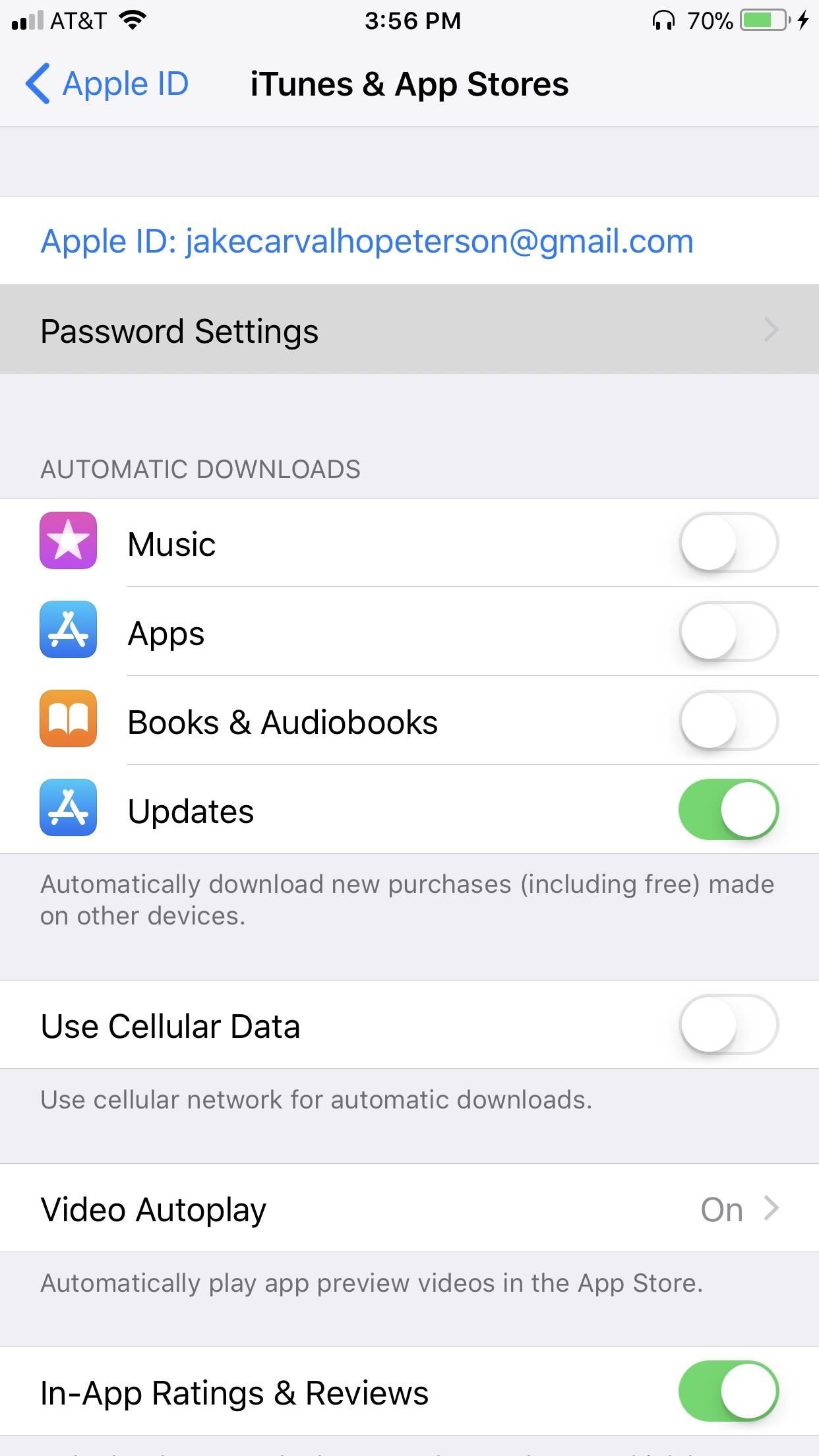

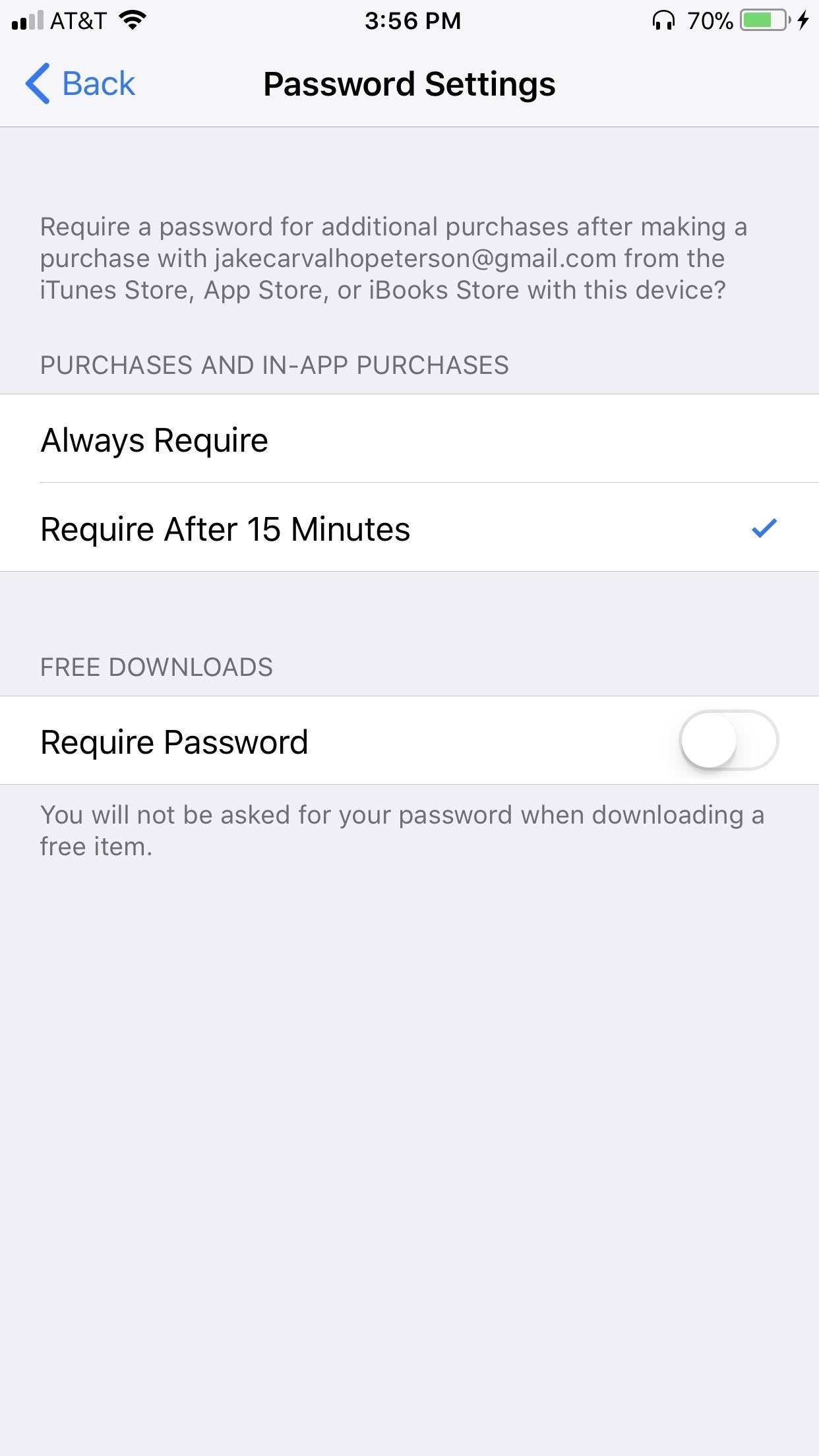

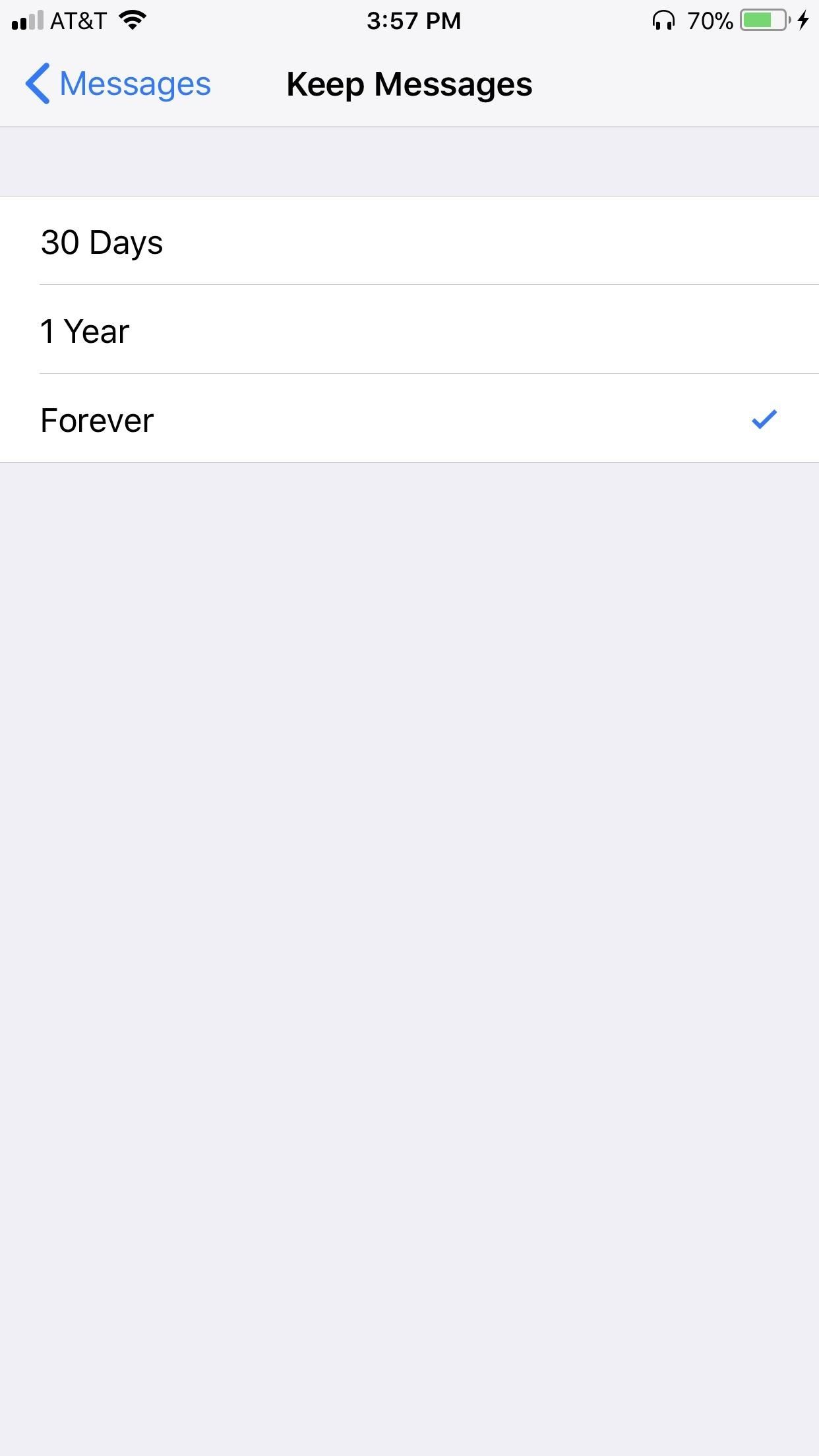

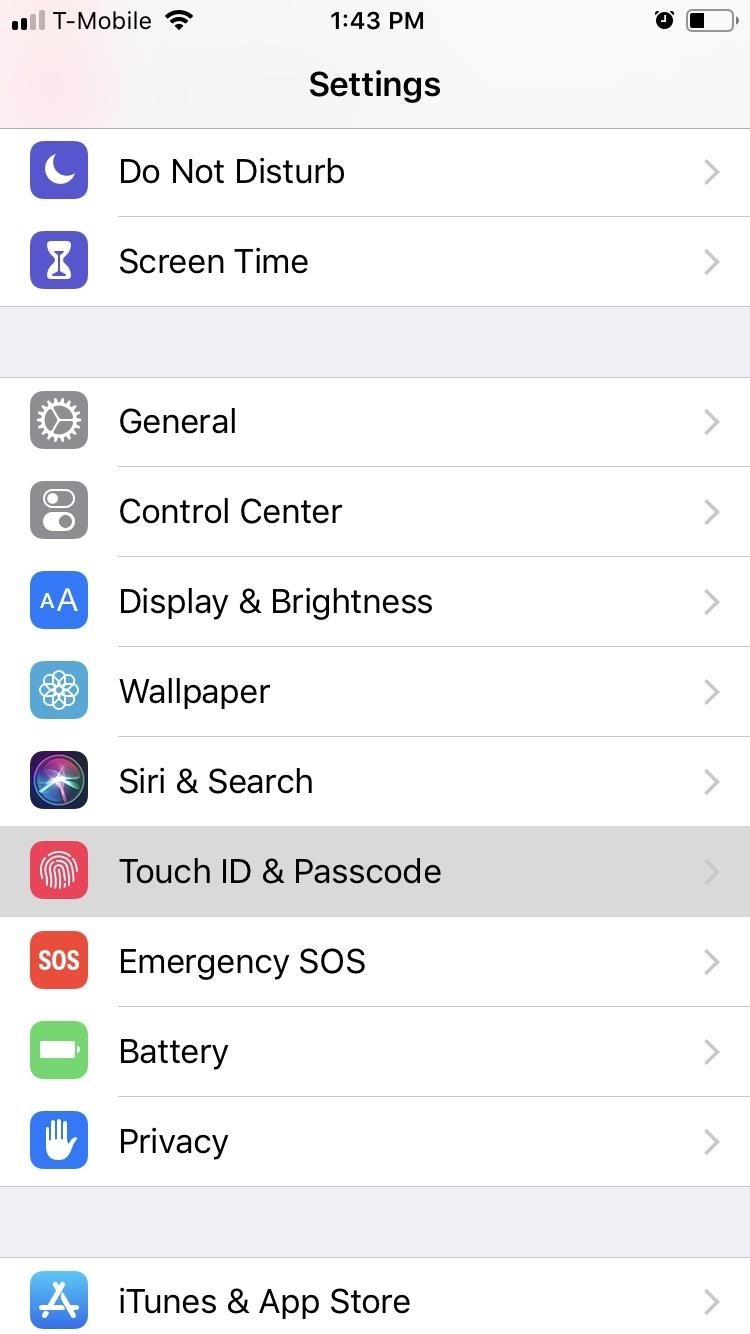

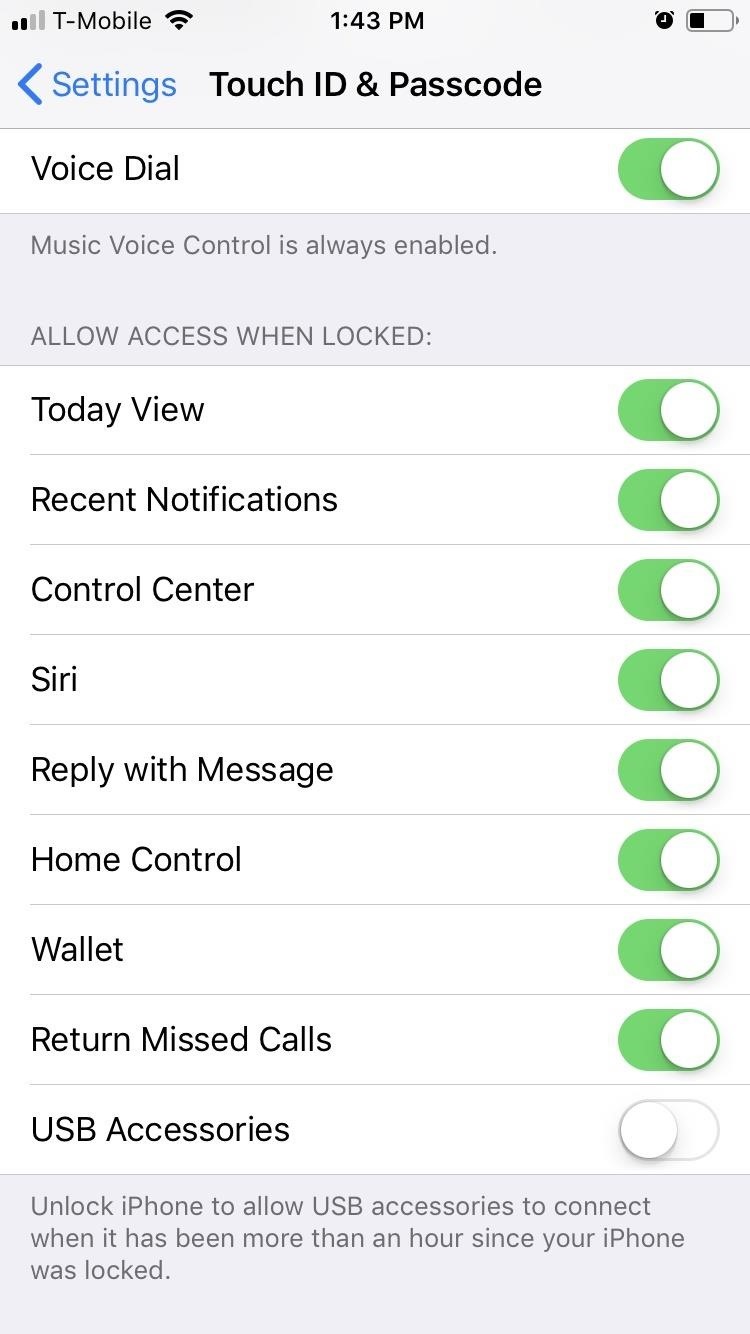

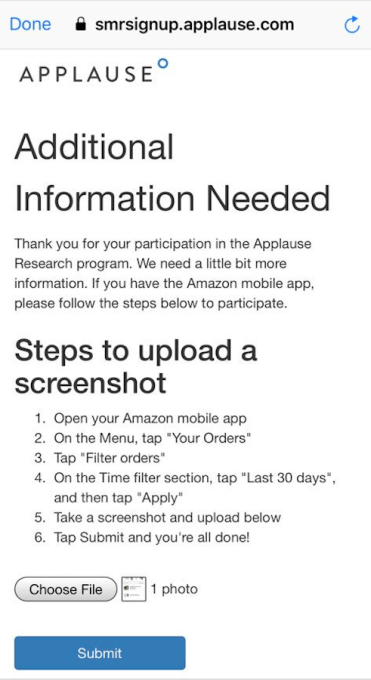

Screenshots of the Guardian app, set to be released in December (Image: supplied)

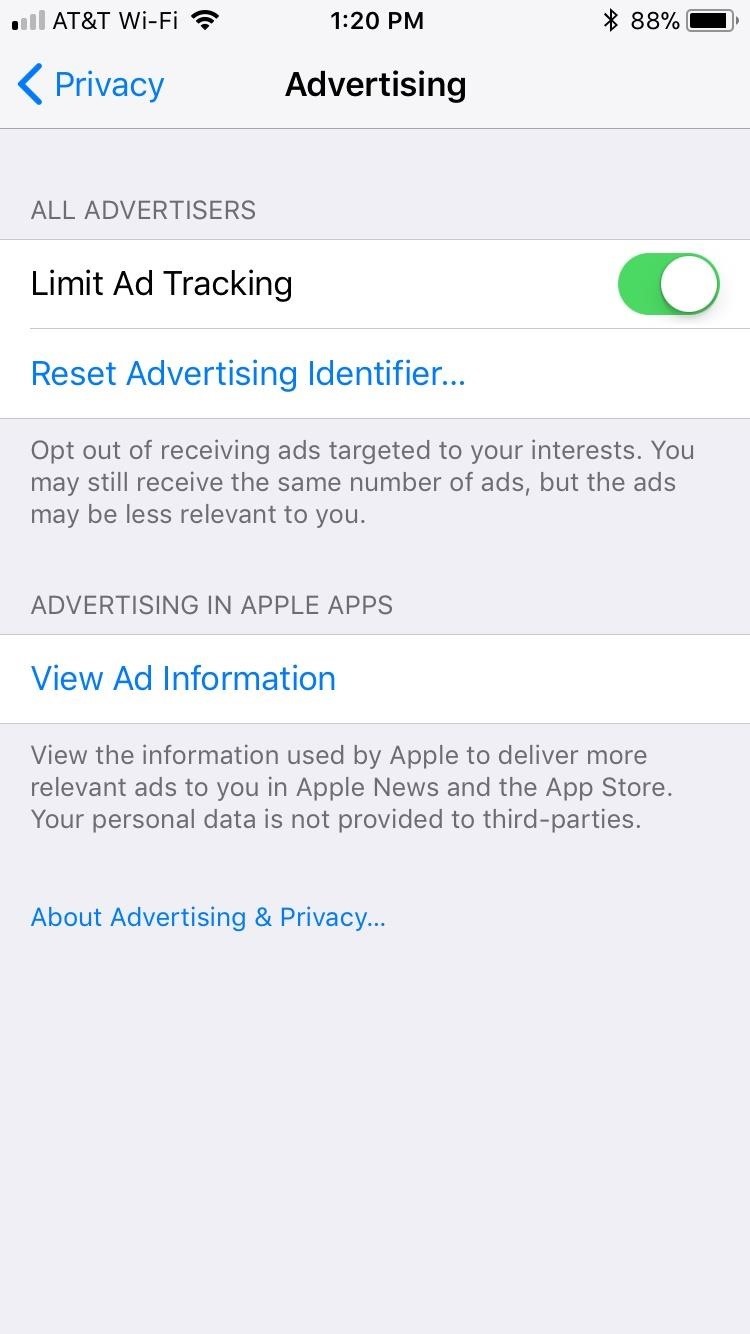

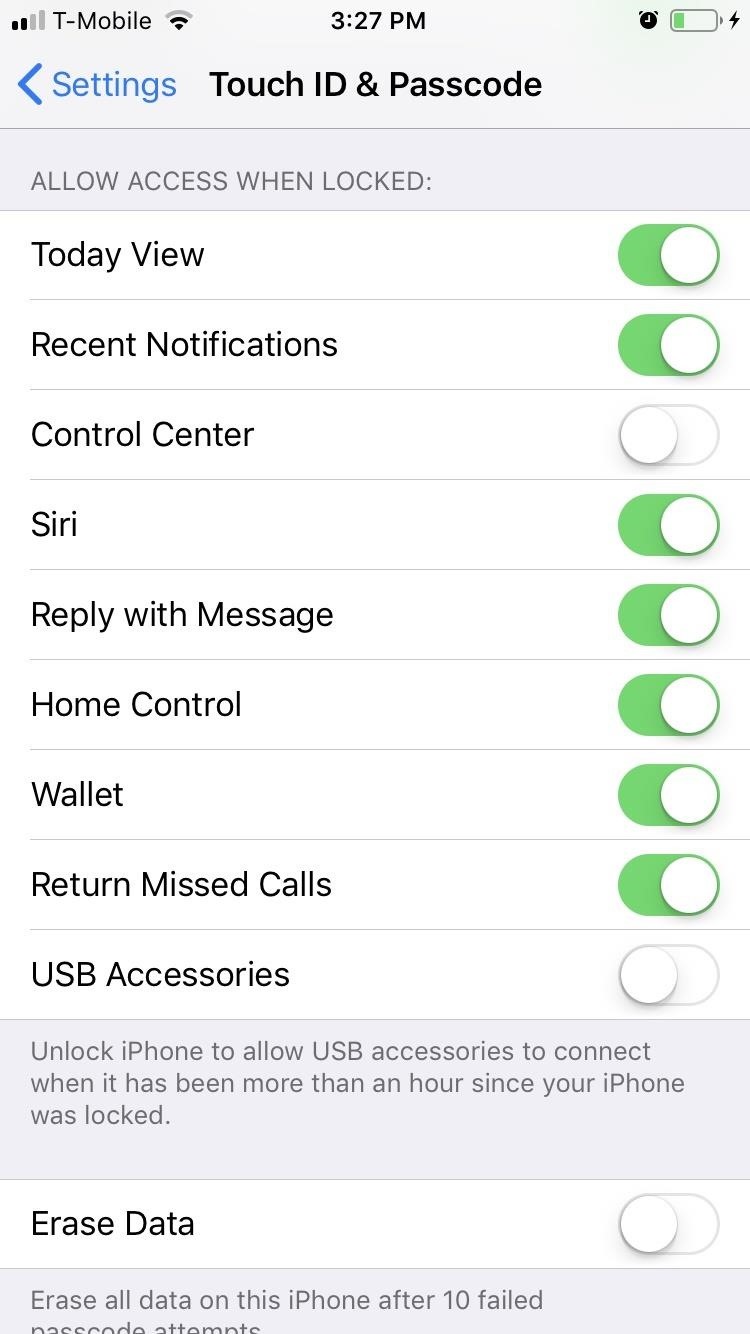

Strafach said that the app will “err on the side of usability” by warning users first, with the option of blocking the traffic. A planned future option will let users switch to a higher, more restrictive privacy level, “Lockdown mode,” which will deny bad traffic by default until the user intervenes.
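
To make the difference between the two modes concrete, here is a rough Python sketch of how a cloud-side filter might decide what to do with a single outbound connection. It is purely illustrative: the host names, categories and function names are hypothetical and are not Guardian’s actual rules or code. Flagged traffic is only warned about by default, Lockdown mode blocks it by default, and an explicit user choice overrides either.

```python
from enum import Enum
from typing import FrozenSet, Optional


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    BLOCK = "block"


# Hypothetical findings, standing in for hosts flagged by the team's app research.
FLAGGED_HOSTS = {
    "tracker.example": "location tracking",
    "ads.example": "aggressive ads",
}


def flagged_reason(host: str) -> Optional[str]:
    """Return why a host is flagged, matching the host itself or any parent domain."""
    parts = host.lower().split(".")
    for i in range(len(parts) - 1):  # stop before the bare top-level domain
        candidate = ".".join(parts[i:])
        if candidate in FLAGGED_HOSTS:
            return FLAGGED_HOSTS[candidate]
    return None


def decide(
    host: str,
    lockdown: bool = False,
    user_blocked: FrozenSet[str] = frozenset(),
    user_allowed: FrozenSet[str] = frozenset(),
) -> Action:
    """Pick an action for a connection to `host` (hypothetical policy)."""
    if flagged_reason(host) is None:
        return Action.ALLOW  # unflagged traffic passes untouched
    if host in user_blocked:
        return Action.BLOCK  # the user chose to block after a warning
    if host in user_allowed:
        return Action.ALLOW  # the user intervened to permit this host
    # Default mode errs on the side of usability; Lockdown denies by default.
    return Action.BLOCK if lockdown else Action.WARN
```

For instance, `decide("api.tracker.example")` would return `Action.WARN`, while the same call with `lockdown=True` would return `Action.BLOCK`.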

What sets the Guardian app apart from its distant competitors is its stance against data collection.

Whenever you use a VPN, whether to evade censorship, site blocks or surveillance, you have to trust the VPN server to keep all of your internet traffic safe, more than you trust your internet provider or cell carrier. Strafach said that neither he nor the team wants to know who uses the app. The less data they have, the less they know, and the safer and more private the app’s users are.

“We don’t want to collect data that we don’t need,” said Strafach. “We consider data a liability. Our rule is to collect as little as possible. We don’t even use Google Analytics or any kind of tracking in the app — or even on our site, out of principle.”

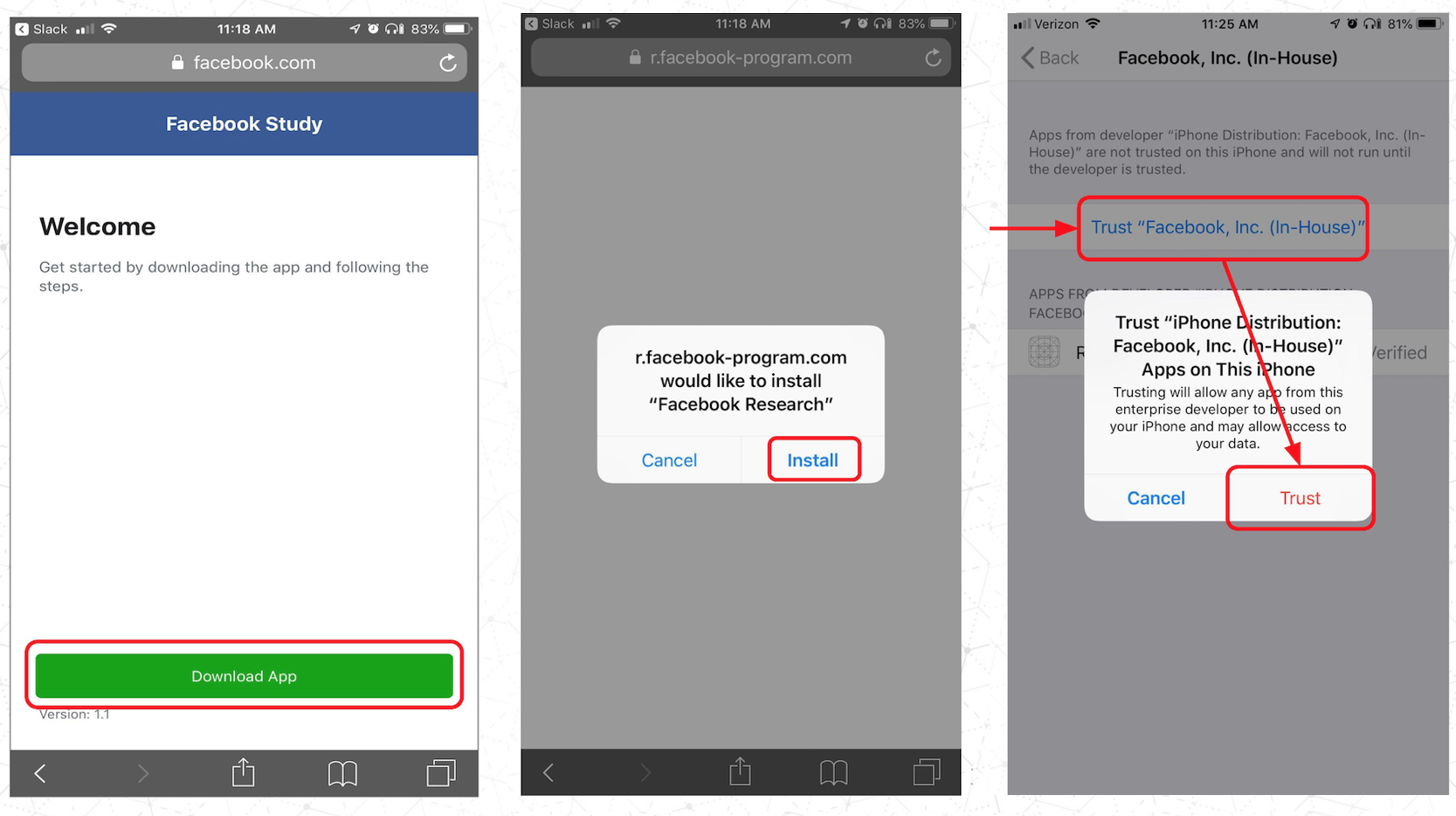

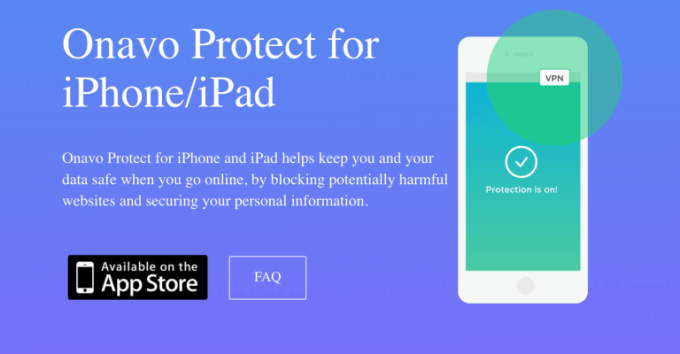

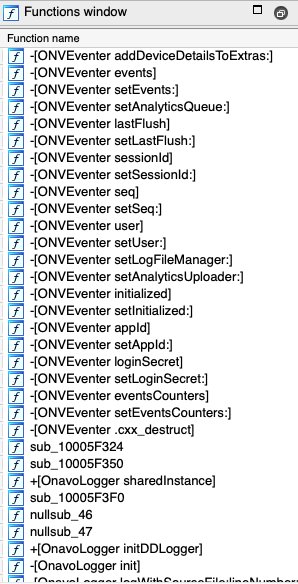

The app works by generating a random set of VPN credentials to connect to the cloud. The connection uses IPsec (IKEv2) with a strong cipher suite, he said. In other words, the Guardian app isn’t a creepy VPN app like Facebook’s Onavo, which Apple pulled from the App Store for collecting data it shouldn’t have. “On the server side, we’ll only see a random device identifier, because we don’t have accounts so you can’t be attributable to your traffic,” he said.
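
The idea of account-free, random credentials is simple enough to sketch. The Python below is a hypothetical illustration only: the article says just that the credentials are random and the tunnel uses IKEv2, so the field names, token lengths and the use of a random shared secret are assumptions. The point is that nothing in the credentials is derived from a name, email address or hardware ID, so the server sees only an unlinkable random identifier. It does not set up the IPsec tunnel itself.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class VpnCredentials:
    # Hypothetical fields: a random device identifier (not tied to any account,
    # name or hardware ID) and a random secret used to authenticate the tunnel.
    device_id: str
    secret: str


def generate_credentials() -> VpnCredentials:
    """Create fresh random credentials using a cryptographically secure RNG."""
    return VpnCredentials(
        device_id=secrets.token_hex(16),  # 16 random bytes as 32 hex characters
        secret=secrets.token_urlsafe(32),  # 32 random bytes, URL-safe base64
    )
```

Because each install would simply call `generate_credentials()`, two devices (or a reinstall on the same device) end up with unrelated identifiers.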

“We don’t even want to say ‘you can trust us not to do anything,’ because we don’t want to be in a position that we have to be trusted,” he said. “We really just want to run our business the old fashioned way. We want people to pay for our product and we provide them service, and we don’t want their data or send them marketing.”

“It’s a very hard line,” he said. “We would shut down before we even have to face that kind of decision. It would go against our core principles.”

I’ve been using the app for the past week. It’s surprisingly easy to use. For a semi-advanced user, it can feel unnatural to flip a virtual switch on the app’s main screen and let it run its course. Anyone who cares about their security and privacy is usually acutely aware of their “opsec,” where one wrong move can blow their anonymity shield wide open. Overall, the app works well. It’s non-intrusive and doesn’t interfere, though the “VPN” icon lit up at the top of the screen is a constant reminder that the app is working in the background.

It’s impressive how firmly the team has kept privacy and anonymity front of mind throughout the app’s design process, even down to letting users pay through Apple Pay and in-app purchases so that no billing information is ever exchanged.

The app doesn’t appear to slow down the connection when browsing the web or scrolling through Twitter or Facebook, on either LTE or Wi-Fi. Even a medium-quality live video stream didn’t cause any issues. But it’s still early days: the closed beta has only a few hundred users, myself included, and as with any bandwidth-intensive cloud service, the quality could fluctuate over time. Strafach said that the backend infrastructure is scalable and can plug-and-play with almost any cloud service in the case of outages.

In its pre-launch state, the company is financially healthy, having raised an initial seed round to support building the team, the app’s launch, and maintaining its cloud infrastructure. Steve Russell, an experienced investor and board member, said he was “impressed” with the team’s vision and technology.

“Quality solutions for mobile security and privacy are desperately needed, and Guardian distinguishes itself both in its uniqueness and its effectiveness,” said Russell in an email.

He added that the team is “world class,” and has built a product that’s “sorely needed.”

Strafach said the team is running conservatively ahead of its public reveal, but that the startup is looking to raise a Series A to support both its anticipated growth and the team’s research that feeds the app with new data. “There’s a lot we want to look into and we want to put out more reports on quite a few different topics,” he said.

The more new threats the team finds, the better the app will become.

The app’s early adopter program is open, including its premium options. The app is expected to launch fully in December.

Source: https://techcrunch.com/2018/10/24/smart-firewall-guardian-iphone-app-privacy-before-profits/
